Make AI Infrastructure Usable

AI infrastructure hasn’t caught up to AI ambition. Enterprises are investing heavily in GPUs and cloud resources, but much of that infrastructure sits idle. Without a scalable way to manage and deliver it to teams, innovation stalls — and costs spiral.

Enter the Rafay Platform: Easily manage the underlying AI infrastructure and AI/ML tooling data scientists need to innovate faster, with guardrails included. Scale AI/ML workloads across public and on-prem environments.

Consume Compute On Your Terms

Customers use the Rafay Platform to orchestrate multi-tenant consumption of AI infrastructure along with AI platforms and applications such as AI-Models-as-a-Service, Accenture's AI Refinery, and other third-party applications.

Consume the Rafay Platform as a SaaS

The SaaS model helps Rafay customers start AI application delivery immediately. The service is SOC 2 compliant and is designed to meet the requirements put forward by internal security teams.

Consume the Rafay Platform across data center and CSP environments

Whether deploying GPUs in multiple colos, or leasing/renting GPUs in a CSP environment, or both, Rafay can help. All compute across all private and CSP environments can be managed as a single pool of GPUs and CPUs, reducing operational overhead and enabling cloud-bursting use cases.

Consume the Rafay Platform in an air-gapped model

For highly regulated industries, the air-gapped controller model lets customers deploy the Rafay Platform in a data center or in private or public cloud environments. Customers get the same SaaS-like experience on their terms.

The Future of AI Infrastructure Innovation is Bright

The Rafay Platform offers unparalleled scalability, allowing teams to effortlessly adjust resources based on demand. With advanced performance optimization features, AI workloads run faster and more efficiently than ever. Plus, the cost-monitoring features help team leads maximize their ROI on GPUs while minimizing operational expenses.

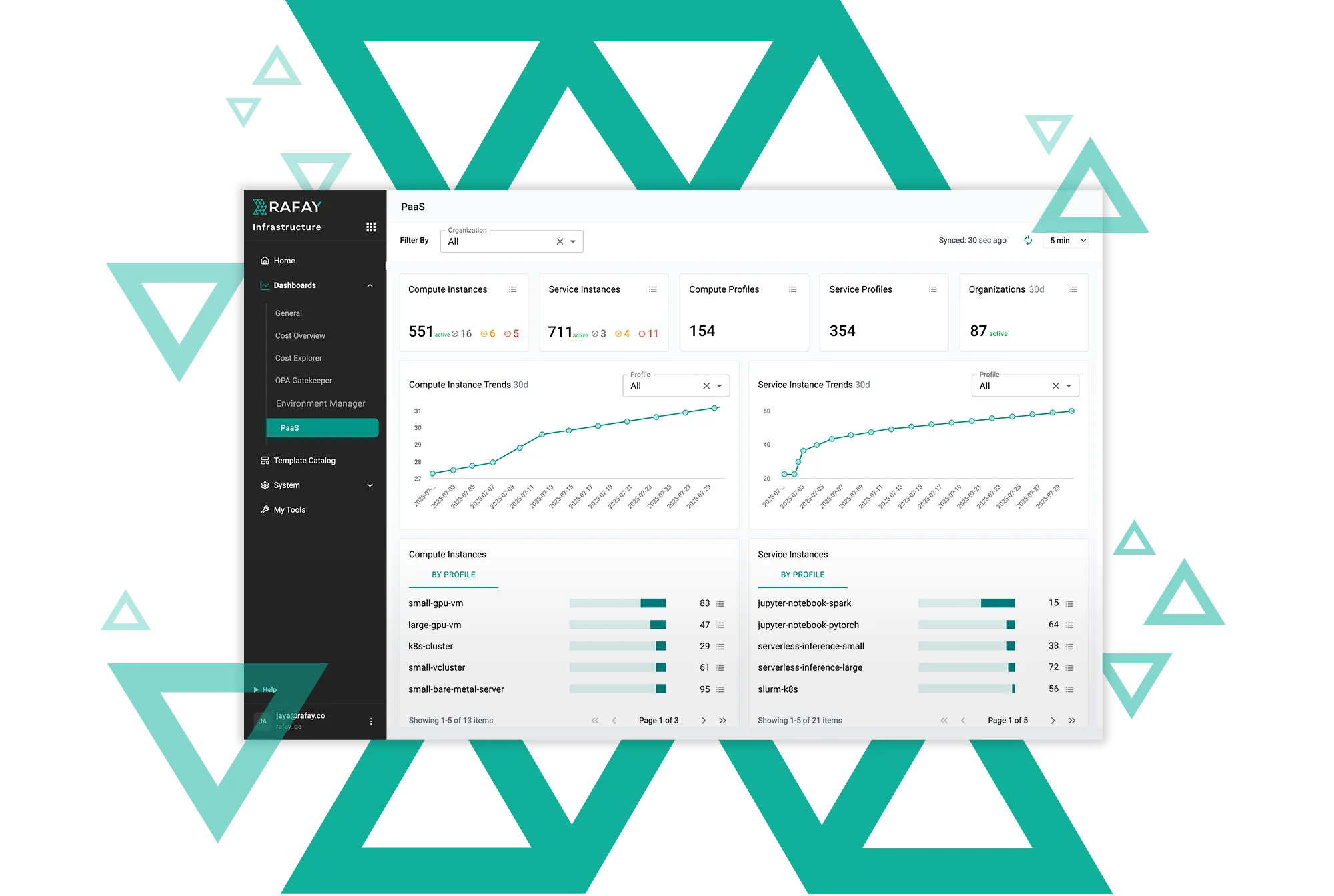

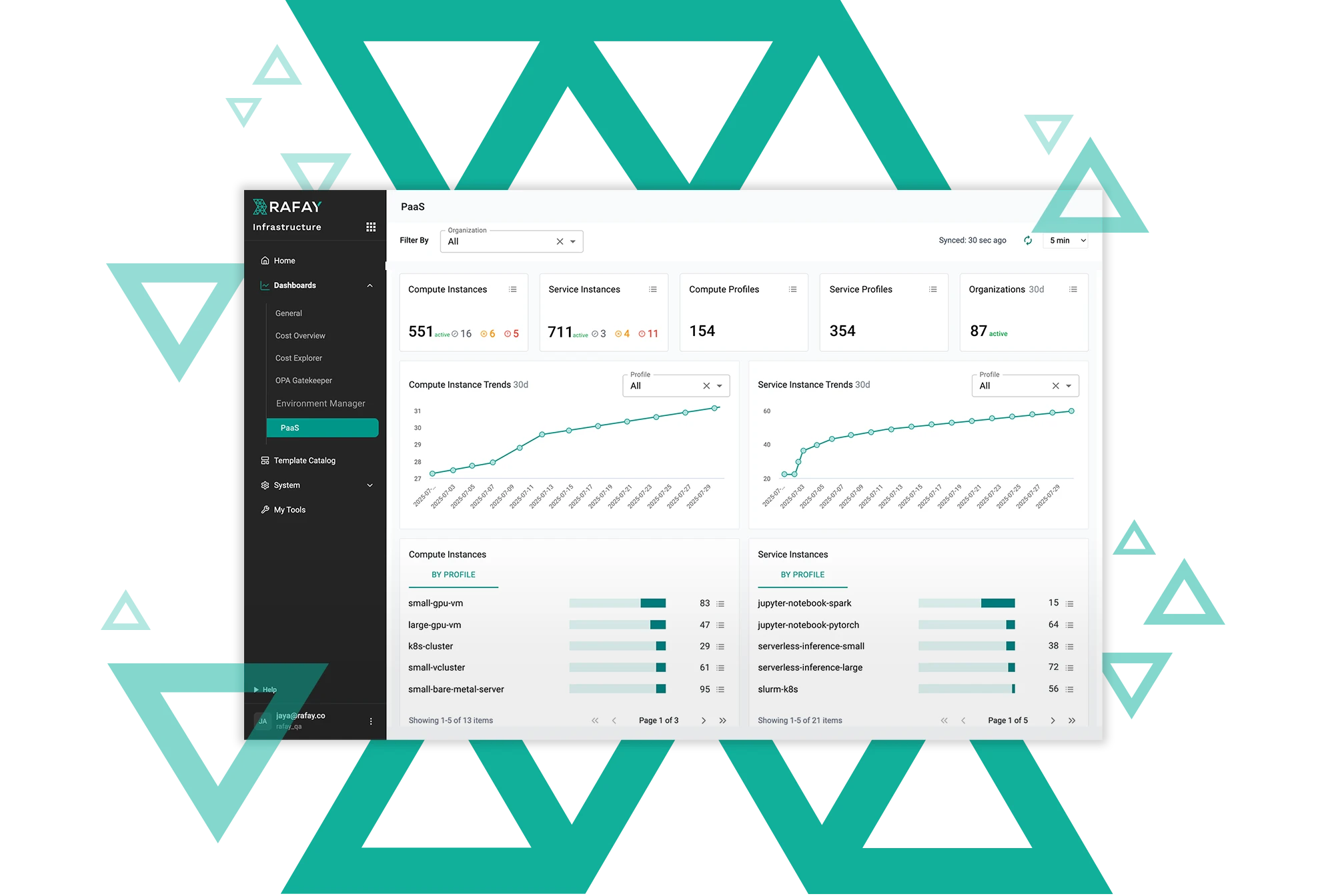

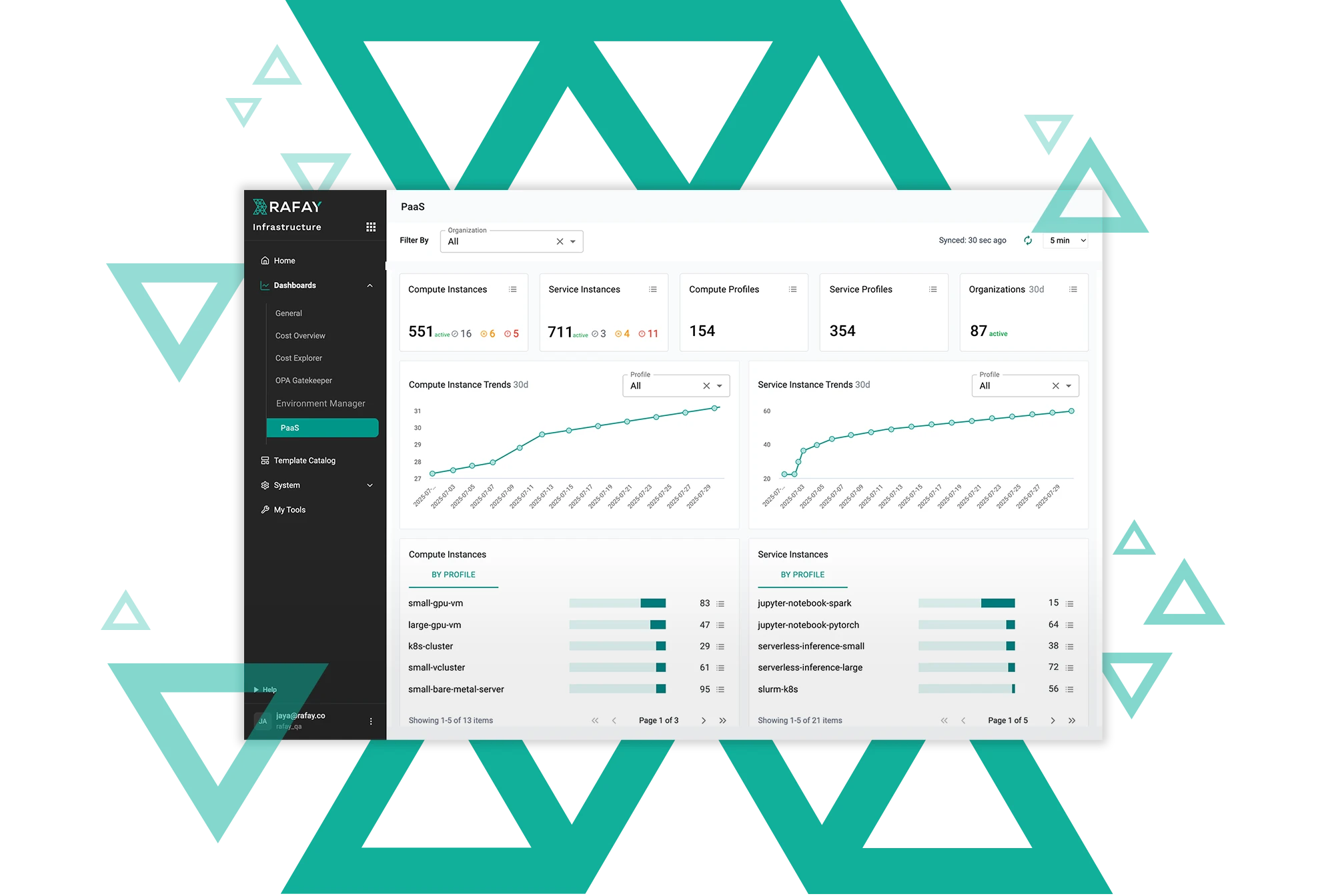

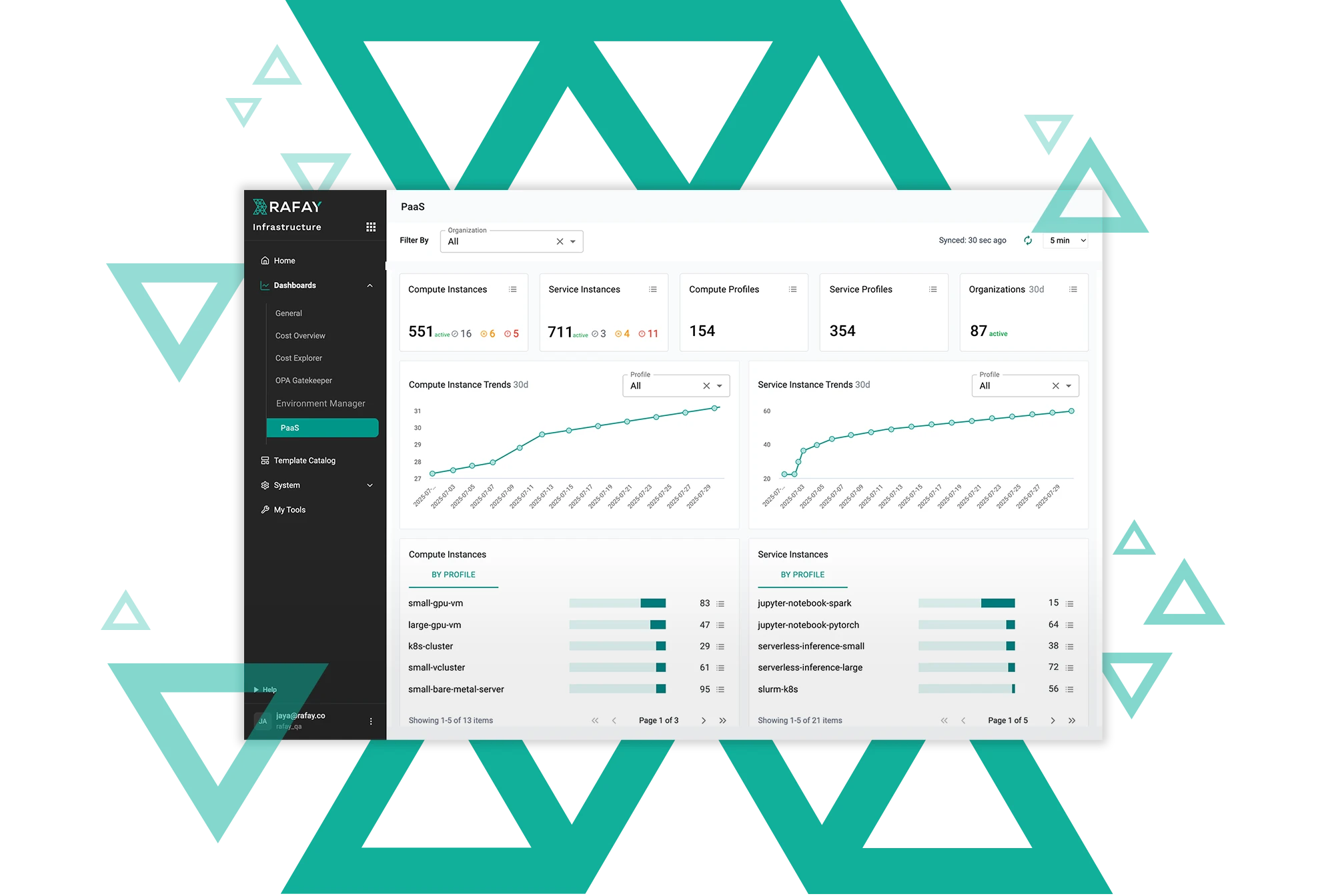

Scale Self-Service Compute Consumption with Confidence

With Rafay, enterprises and service providers deliver a self-service experience across public clouds and data center environments. Developers get self-service access to the compute and tooling they need to move fast and experiment, while platform teams maintain full control, governance, and cost-efficiency.

Accelerated Computing Infrastructure Management

With a vast library of generative AI, compute consumption, and infrastructure management capabilities built in, enterprises and service providers can deliver “as a Service” experiences at each layer of the stack without investing in massive teams or multiple quarters of development.

From GPU-as-a-Service to AI-as-a-Service

Cloud providers, neoclouds, and Sovereign AI clouds that have partnered with Rafay are leading the charge to deliver CSP-grade use cases to their user communities. From agentic applications, ML workbenches, and models as a service to highly tuned virtual machines, K8s clusters, and bare-metal servers, Rafay is fast becoming the global partner of choice for the most innovative GPU providers in the world.

Trusted by leading enterprises, neoclouds and service providers

Focus on AI Innovation, Not Infrastructure

Experience unparalleled performance and scalability with the Rafay Platform GPU PaaS™ stack. Simplify AI infrastructure management and application delivery while reducing operational costs and enhancing productivity. The solution supports traditional and LLM-based (GenAI) models and helps users make efficient use of GPU resources with capabilities like GPU matchmaking, virtualization, and time-slicing, saving customers money and shortening time to production.

Questions and answers about GPU PaaS™ for Enterprises

Find answers to common questions about our enterprise solutions and how they can benefit you.

Yes. GPU and Sovereign Cloud providers can choose to offer fractional GPUs to end users in a self-service fashion. The Rafay Platform will take care of security, compute isolation and chargeback data collection.

Yes. The Rafay Platform offers a variety of workbenches out of the box. These are based on Kubeflow and KubeRay, and end users consume them “as a service,” without needing to configure or operate any of these tools on their own. Further, the Rafay Platform provides a low-code/no-code framework that empowers partners to bring new capabilities to market faster, such as verticalized agents, co-pilots, document translation services, and more.

Yes. The Rafay Platform has always supported CPU-based workloads and can easily deliver a PaaS experience that offers CPU+GPU instances to end users.

Rafay offers a comprehensive solution for chargebacks and billing. The platform collects granular chargeback information on resource usage, which can be easily exported to customers’ existing billing systems for further processing and distribution. Rafay allows for customizable chargeback group definitions to align with organizational structures or projects. Both group definition and data collection can be carried out programmatically, enabling efficient and accurate billing processes.
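To make the idea concrete, here is a minimal Python sketch of how usage records might be rolled up into chargeback groups and exported for a billing system. It is an illustration only and does not use the Rafay API: the `UsageRecord` schema, the group names, the project names, and the rate of 2.0 per GPU-hour are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
import csv
import io


@dataclass(frozen=True)
class UsageRecord:
    """One metered interval of GPU use (hypothetical schema, not Rafay's)."""
    project: str
    gpu_fraction: float  # 1.0 = a whole GPU, 0.25 = a quarter slice
    hours: float


# Hypothetical chargeback groups: each maps a cost center to its projects.
CHARGEBACK_GROUPS = {
    "research": {"nlp-lab", "vision-lab"},
    "product": {"search-ranking"},
}


def gpu_hours_by_group(records, groups):
    """Sum GPU-hours (fraction * hours) per chargeback group."""
    project_to_group = {
        project: group
        for group, projects in groups.items()
        for project in projects
    }
    totals = defaultdict(float)
    for rec in records:
        # Projects not listed in any group are collected under "unassigned".
        group = project_to_group.get(rec.project, "unassigned")
        totals[group] += rec.gpu_fraction * rec.hours
    return dict(totals)


def to_csv(totals, rate_per_gpu_hour):
    """Render totals as CSV text that a billing system could ingest."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["group", "gpu_hours", "cost"])
    for group in sorted(totals):
        hours = totals[group]
        cost = hours * rate_per_gpu_hour
        writer.writerow([group, f"{hours:.2f}", f"{cost:.2f}"])
    return buf.getvalue()


# Sample data (made up for illustration).
records = [
    UsageRecord("nlp-lab", 1.0, 10.0),
    UsageRecord("vision-lab", 0.5, 8.0),
    UsageRecord("search-ranking", 0.25, 40.0),
    UsageRecord("sandbox", 1.0, 2.0),
]
totals = gpu_hours_by_group(records, CHARGEBACK_GROUPS)
report = to_csv(totals, rate_per_gpu_hour=2.0)
print(report)
```

Weighting each record by its GPU fraction means fractional GPU consumers are billed in proportion to the slice they used, and keeping the group definitions as plain data means they can be changed programmatically without touching the aggregation logic.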

Yes. Rafay supports a number of IaC frameworks, enabling customers to manage every aspect of their cloud programmatically. The platform supports Terraform, OpenTofu, GitOps pipelines, and CLI and API workflows out of the box.

The Definitive GPU PaaS Reference Architecture

Understand what it takes to deliver the right GPU infrastructure to your business.