Building AI Value within Borders

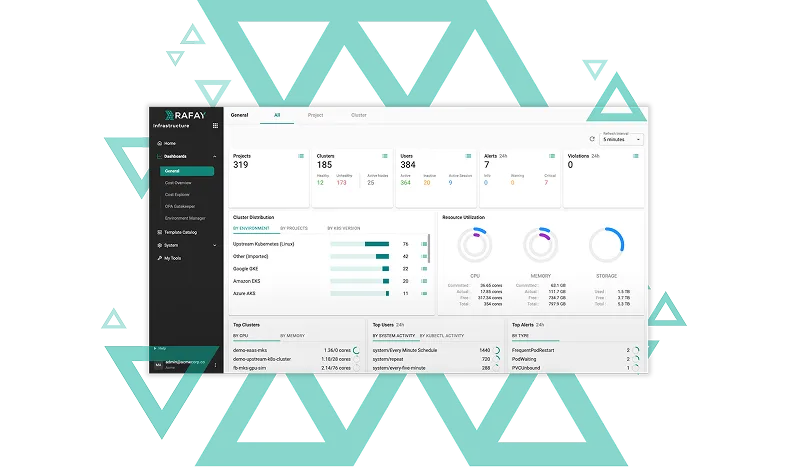

Rafay's central orchestration platform facilitates efficient, self-service infrastructure and AI application management.

The Rafay Platform’s multi-tenant foundation speeds up innovation: teams experiment faster, ship new capabilities and features sooner, reduce infrastructure waste, and increase utilization, all of which leads to better business outcomes. With Rafay, platform engineering teams deliver self-service consumption to developers and data scientists, enforce governance and control across hybrid environments, and keep infrastructure costs low.


Most enterprises face a trade-off: meet strict compliance requirements or move fast. The Rafay Platform makes both possible. With air-gapped deployments, multi-tenancy, and centrally governed self-service environments, enterprises can securely operationalize AI workloads in their own data centers, while still giving internal teams the agility of a modern cloud experience.


Workloads in the public cloud create new challenges: spiking infrastructure costs, governance gaps, and operational drag. The Rafay Platform abstracts away the complexity, giving platform teams a single pane of glass to orchestrate, govern, and optimize AI/ML infrastructure across clouds. Enterprises cut costs, reduce overhead, and give developers the freedom to innovate faster.


A majority of Rafay customers consume Rafay in a SaaS form factor, because the SaaS model lets them start using the Rafay Platform immediately and deliver value to their own customers. The Rafay Platform is SOC 2 compliant and can address the requirements put forward by your security team.

Customers in highly regulated industries prefer Rafay’s air-gapped controller model. Team Rafay is ready to help you deploy the Rafay Platform in your data center or in your private/public cloud environment. You get exactly the same experience and all the same features available to our SaaS customers.

Whether you plan to deploy GPUs in multiple colos, lease GPUs in a cloud service provider (CSP) environment, or both, Rafay can help. With Rafay, all your compute across private and CSP environments can be managed as a single pool of GPUs and CPUs, reducing operational overhead and enabling cloud-bursting use cases.
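To make the single-pool and cloud-bursting idea concrete, here is a small placement sketch. It is not Rafay's scheduler or API: the `Site` type, the `place` function, the site names, and the private-first, first-fit policy are all hypothetical assumptions, chosen only to show what bursting over a pooled inventory means. Jobs land on private capacity when it fits and spill over to CSP capacity only when it does not.

```python
"""Hypothetical cloud-bursting placement over a pooled GPU inventory.

Illustrative only: not Rafay's scheduler, data model, or API.
"""
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    kind: str        # hypothetical: "private" (colo/data center) or "csp" (leased cloud)
    free_gpus: int   # GPUs currently unallocated at this site


def place(sites, gpus_needed):
    """Pick a site for a job, preferring private capacity (first-fit).

    Private sites are tried first (most free GPUs first); CSP sites are
    used only when no private site can fit the job, i.e. the job "bursts".
    Returns the chosen site name, or None if nothing in the pool fits.
    """
    ordered = sorted(sites, key=lambda s: (s.kind != "private", -s.free_gpus))
    for site in ordered:
        if site.free_gpus >= gpus_needed:
            site.free_gpus -= gpus_needed  # reserve capacity in the shared pool
            return site.name
    return None


if __name__ == "__main__":
    pool = [
        Site("colo-east", "private", 4),
        Site("colo-west", "private", 2),
        Site("csp-region-a", "csp", 16),
    ]
    for job in (4, 2, 8, 32):
        print(job, "->", place(pool, job))
```

Ordering private sites first keeps leased CSP capacity in reserve for overflow; a production scheduler would likely also weigh cost, data locality, and GPU model when choosing a site.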

Find answers to common questions about our GPU Cloud Orchestration services below.

GPU and Sovereign Cloud providers can offer fractional GPUs to end users in a self-service fashion. The Rafay Platform takes care of security, compute isolation, and chargeback data collection.

The Rafay Platform offers a variety of workbenches out of the box. These are based on Kubeflow and KubeRay, and end users consume them “as a service,” without needing to configure or operate any of these tools themselves. The Rafay Platform also provides a low-code/no-code framework that lets partners bring new capabilities to market faster, such as verticalized agents, co-pilots, and document translation services.

The Rafay Platform has always supported CPU-based workloads and can readily deliver a PaaS experience that offers CPU+GPU instances to end users.

Rafay offers a comprehensive solution for chargebacks and billing. The platform collects granular chargeback information on resource usage, which can be easily exported to customers’ existing billing systems for further processing and distribution. Rafay allows for customizable chargeback group definitions to align with organizational structures or projects. Both group definition and data collection can be carried out programmatically, enabling efficient and accurate billing processes.
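As a rough illustration of how customizable chargeback groups turn raw usage into billable totals, the sketch below rolls metered usage up by group and exports it as CSV for a downstream billing system. Everything here is a hypothetical assumption, not Rafay's data model or export format: the `UsageRecord` fields, the namespace-to-group mapping, the hourly rates, and the CSV columns.

```python
"""Hypothetical chargeback roll-up and CSV export.

Illustrative only: not Rafay's API, data model, or export format.
"""
import csv
import io
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    namespace: str  # hypothetical: where the usage was metered
    resource: str   # e.g. "gpu" or "cpu"
    hours: float    # resource-hours consumed in the billing period


# Hypothetical chargeback group definition: namespace -> cost center.
GROUPS = {
    "ml-research": "team-research",
    "ml-research-dev": "team-research",
    "fraud-models": "team-risk",
}

# Hypothetical hourly rates, for illustration only.
RATES = {"gpu": 2.50, "cpu": 0.05}


def rollup(records, groups, rates):
    """Sum cost per (group, resource); unmapped namespaces go to 'unassigned'."""
    totals = defaultdict(float)
    for r in records:
        group = groups.get(r.namespace, "unassigned")
        totals[(group, r.resource)] += r.hours * rates[r.resource]
    return dict(totals)


def to_csv(totals):
    """Render totals as CSV text for import into an existing billing system."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["group", "resource", "cost"])
    for (group, resource), cost in sorted(totals.items()):
        writer.writerow([group, resource, f"{cost:.2f}"])
    return buf.getvalue()


if __name__ == "__main__":
    usage = [
        UsageRecord("ml-research", "gpu", 10.0),
        UsageRecord("ml-research-dev", "gpu", 2.0),
        UsageRecord("fraud-models", "cpu", 100.0),
        UsageRecord("sandbox", "gpu", 1.0),
    ]
    print(to_csv(rollup(usage, GROUPS, RATES)))
```

Keeping the group mapping as plain data means it can be generated or updated programmatically, and routing unmapped namespaces to an explicit `unassigned` bucket keeps usage from silently disappearing from the bill.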

Rafay supports a number of infrastructure-as-code (IaC) frameworks, enabling customers to manage every aspect of their cloud programmatically. The Platform supports Terraform, OpenTofu, GitOps pipelines, and CLI and API workflows out of the box.

A related paper explores the key challenges organizations face in supporting AI/ML initiatives, as well as best practices for successfully leveraging Kubernetes to accelerate AI/ML projects.
