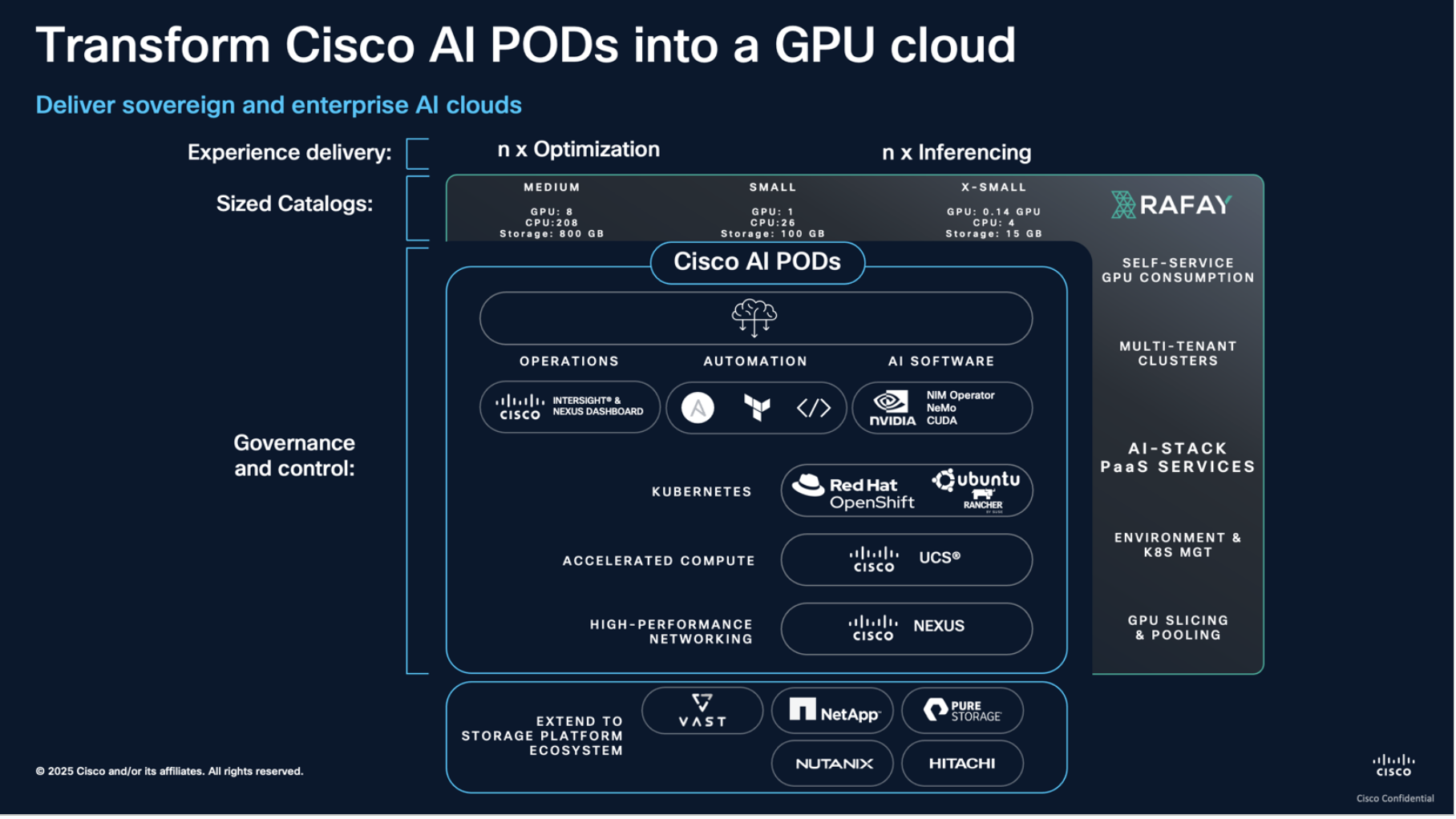

Unlock the Next Step: From Cisco AI PODs to Self-service GPU Clouds with Rafay

This post draws on insights from the new whitepaper Transform Cisco AI PODs into a Self-service GPU Cloud, which explores how Cisco and Rafay together enable organizations to operationalize AI faster.

Enterprises, sovereign organizations, and service providers are making bold moves into AI. Cisco AI PODs already provide a powerful, pre-validated foundation for deploying AI infrastructure at scale. They bring together compute, storage, and networking in a modular design that simplifies procurement and deployment. The next step is to make that infrastructure consumable as a service.

This is where Rafay complements Cisco AI PODs. Rafay's GPU Platform as a Service (PaaS) adds the critical consumption layer, turning the hardware into a governed, self-service GPU cloud. Together, Cisco and Rafay provide secure, multi-tenant access, standardized workload SKUs, and policy-driven governance.

From Infrastructure to Consumption

Cisco AI PODs bring the performance and security required for enterprise AI. They are built to support workloads such as generative AI, retrieval-augmented generation, and large-scale inference. However, deploying hardware is only part of the journey. IT and platform teams must handle competing requests from many users, enforce access controls, and maintain consistency across environments. Without the right operating model, this leads to ticket queues, duplicated work, and bottlenecks.

A Solution That Fits Every Customer

This solution is purpose-built for a diverse set of customers who need to operationalize GPU infrastructure for AI.

- Enterprise IT teams: Gain federated self-service, quota enforcement, and centralized visibility. Reduce infrastructure duplication, improve developer agility, and embed governance into daily operations.

- Sovereign and public sector organizations: Meet compliance needs with secure multi-tenancy, policy enforcement, and centralized audit logging. Support both IT and research divisions in air-gapped environments without drift or rework.

- Cloud Service Providers (CSPs) and Managed Service Providers (MSPs): Monetize GPU infrastructure with multi-tenant platforms, white-labeled developer portals, automated tenant onboarding, and built-in chargeback metering.

- Existing Cisco customers: Extend the ROI of current deployments by adding GPU orchestration as an overlay, with no re-architecture or downtime required.

- Greenfield AI builders: Start fresh with pre-validated AI PODs and orchestration, reducing the time from procurement to operational AI services from months to weeks.

Operationalizing AI with Confidence

Pairing Cisco AI PODs with Rafay GPU PaaS gives organizations a pathway to operationalize AI with confidence. Infrastructure becomes service-ready, developers gain speed without losing guardrails, and operators enforce governance and quotas while maintaining observability at every layer.

To dive deeper into how this joint solution works and who it benefits, access the full whitepaper: Transform Cisco AI PODs into a Self-service GPU Cloud.

Cisco and Rafay are hosting a live webinar on October 21, 2025, at 8 AM PT, where experts from both companies will demonstrate how Cisco AI PODs and Rafay GPU PaaS together enable organizations to turn infrastructure into production-ready AI services in weeks.