What is JupyterHub? A Beginner’s Guide

This is part of a blog series on AI/Machine Learning. In the previous blog, we discussed Jupyter Notebooks, how they are different, and the challenges organizations run into when using them at scale. In this blog, we will look at how organizations can use JupyterHub to provide access to Jupyter notebooks as a centralized service for their data scientists.

Why Not Standalone Jupyter Notebooks?

In the last blog, we summarized the issues that data scientists have to struggle with if they use standalone Jupyter notebooks. Let's review them again.

Installation & Configuration

Although data scientists can download, configure, and use Jupyter notebooks on their laptops, this approach does not scale well for organizations because it requires every data scientist to become an expert in, and spend time on, all of the following:

- Downloading and installing the correct versions of Python

- Creating virtual environments and troubleshooting installs

- Dealing with system vs. non-system versions of Python

- Installing packages and organizing folders

- Understanding the difference between conda and pip

- Learning various command-line commands

- Understanding the differences between Python on Windows and macOS
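To give a sense of what this looks like in practice, here is a minimal sketch of the kind of check a data scientist might run just to find out which Python they are actually using. The function name `describe_python_environment` is hypothetical. The conda check relies on the assumption that a conda environment contains a `conda-meta` directory under its prefix, which is a common convention rather than a guarantee.

```python
import os
import sys


def describe_python_environment():
    """Return basic facts about the interpreter running this code."""
    # Inside a venv/virtualenv, sys.prefix points at the environment while
    # sys.base_prefix still points at the Python it was created from.
    in_venv = sys.prefix != sys.base_prefix
    # Assumption: conda environments keep a "conda-meta" directory
    # directly under their prefix.
    in_conda = os.path.isdir(os.path.join(sys.prefix, "conda-meta"))
    return {
        "executable": sys.executable,
        "version": "{}.{}.{}".format(*sys.version_info[:3]),
        "in_venv": in_venv,
        "in_conda": in_conda,
    }


if __name__ == "__main__":
    for key, value in describe_python_environment().items():
        print(f"{key}: {value}")
```

Two data scientists running this on their own laptops will often get different answers, which is exactly the inconsistency a centralized service is meant to remove.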

Lack of Standardization

On top of this, there are other limitations that will impact the productivity of data scientists as well:

- Being limited by the compute capabilities of the laptop (for example, no GPU)

- Resorting to Shadow IT to get compute resources from public clouds

- Constantly downloading and uploading large data sets

- Being unable to access data because of corporate security policies

- Being unable to collaborate effectively (sharing notebooks, etc.) with other data scientists

Note: JupyterHub's goal is to eliminate all these issues and help IT teams deliver Jupyter Notebooks as a managed service for their data scientists.

In a nutshell, with JupyterHub, IT/Ops can provide an experience where data scientists just need to log in, explore data, and write Python code. They do not have to worry about installing software on their local machines, and they get access to a consistent, standardized, and powerful environment to do their jobs.

JupyterHub Requirements for IT/Ops

For multi-user use at scale, JupyterHub is commonly run on Kubernetes, and it needs to be deployed and operated by IT/Ops in whichever environment (public or private cloud) Kubernetes can be made available. Although a Helm chart for JupyterHub is available, IT/Ops needs to do much more to operationalize it in their organization. Let's explore these requirements in greater detail.

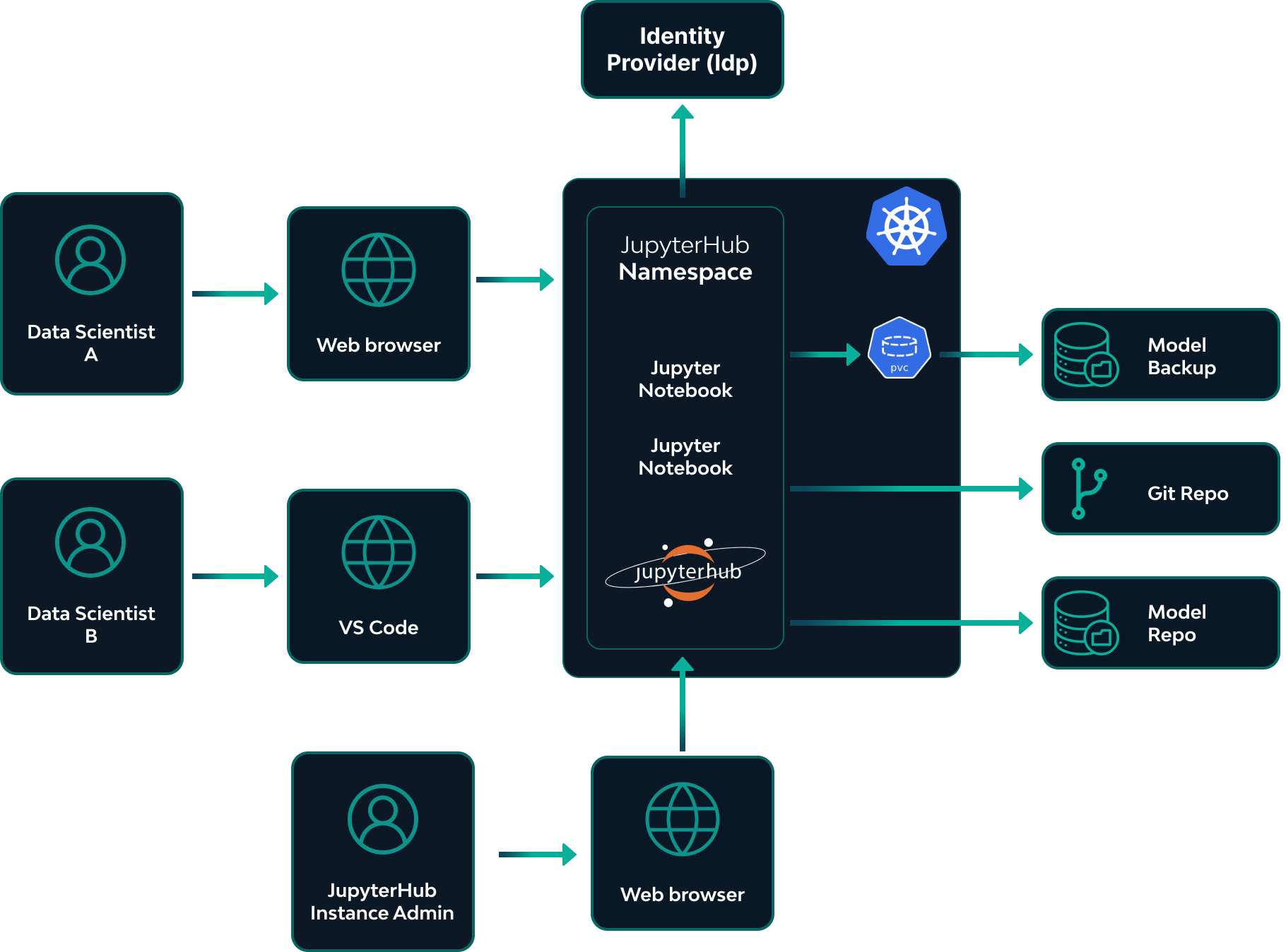

The diagram below shows what a typical JupyterHub environment managed by IT/Ops would look like at steady state.
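To make the Helm chart starting point more concrete, here is a minimal sketch of how IT/Ops might generate a standardized values override for the per-user notebook servers. The function name `build_hub_values`, the image name, the tag, and the resource sizes are all hypothetical. The key names (`singleuser.image`, `singleuser.cpu.limit`, `singleuser.memory.limit`, `singleuser.extraResource.limits`) follow the Zero to JupyterHub Helm chart as we understand it and should be checked against the chart version you deploy.

```python
import json


def build_hub_values(image_name, image_tag, cpu_limit, memory_limit, gpus=0):
    """Build a values override for the single-user notebook servers."""
    singleuser = {
        # Every data scientist gets the same, centrally maintained image.
        "image": {"name": image_name, "tag": image_tag},
        # Per-user caps keep one notebook from starving the others.
        "cpu": {"limit": cpu_limit},
        "memory": {"limit": memory_limit},
    }
    if gpus > 0:
        # Assumption: GPUs are exposed through the NVIDIA device plugin
        # under the "nvidia.com/gpu" resource name.
        singleuser["extraResource"] = {"limits": {"nvidia.com/gpu": str(gpus)}}
    return {"singleuser": singleuser}


if __name__ == "__main__":
    # Hypothetical image and sizes, for illustration only.
    values = build_hub_values(
        "registry.example.com/datascience-notebook", "2024.1", 2, "4G", gpus=1
    )
    print(json.dumps(values, indent=2))
```

Because JSON is a subset of YAML, the printed output can in principle be saved to a file and passed to Helm as a values file. This covers only the notebook image and resource limits. Authentication, storage, networking, and upgrades are among the things IT/Ops still has to handle separately.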

Rafay's Templates for AI/GenAI

The Rafay team has been helping many of our customers operationalize JupyterHub for their data scientists. We have packaged the best practices and security, and streamlined the entire deployment process so that IT/Ops can save a significant amount of time and money. The entire process is now a 1-click deployment experience for IT/Ops, and they are now starting to offer this to their data science teams as a self-service experience.

Learn more about Rafay's template for JupyterHub

Blog Ideas

Sincere thanks to the readers who spend time on our product blogs. We wrote this blog because we are working with several customers that are expanding their use of Jupyter notebooks on Kubernetes and Amazon SageMaker using the Rafay Platform. Please contact us if you would like us to write about other topics.