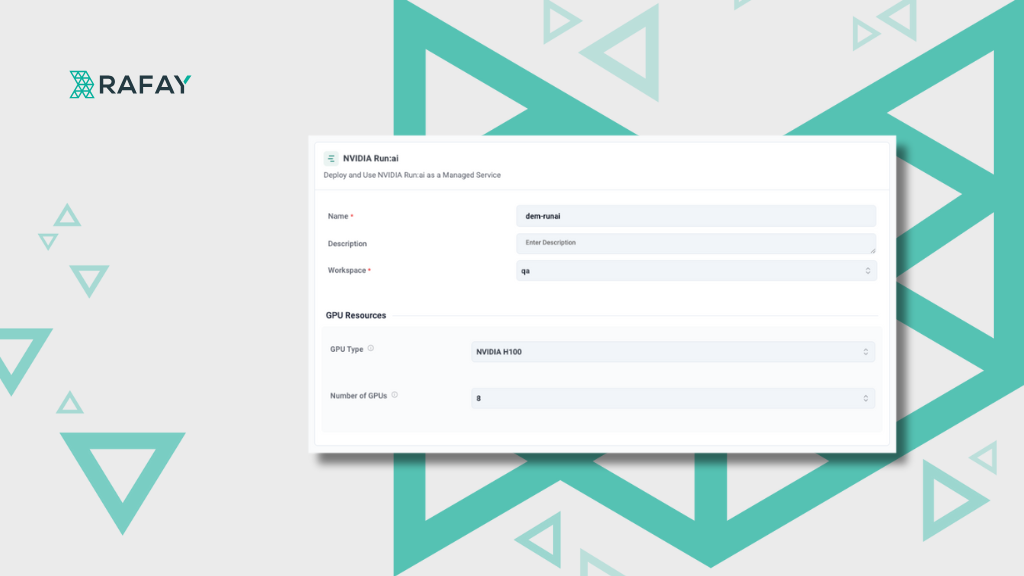

How GPU Clouds Deliver NVIDIA Run:ai as Self-Service with Rafay GPU PaaS

Learn how Rafay GPU PaaS enables GPU Clouds to offer NVIDIA Run:ai as a fully automated, multi-tenant managed service, delivered through self-service with turnkey deployment and lifecycle management.