ArgoCD Reconciliation Explained: How It Works and Why It Matters

ArgoCD is a powerful GitOps controller for Kubernetes, enabling declarative configuration and automated synchronization of workloads.


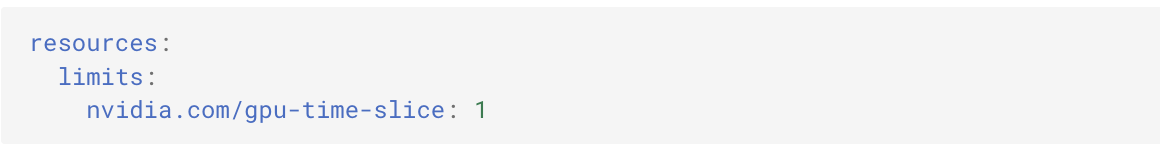

In the modern era of containerized machine learning and AI infrastructure, GPUs are a critical and expensive asset. Kubernetes makes scheduling and isolation easier—but managing GPU utilization efficiently requires more than just assigning something like

In this blog post, we will explore what custom GPU resource classes are, why they matter, and when to use them for maximum impact. Custom GPU resource classes are a powerful technique for fine-grained GPU management in multi-tenant, cost-sensitive, and performance-critical environments.

If you are new to GPU sharing approaches, we recommend reading the following introductory blogs: Demystifying Fractional GPUs in Kubernetes and Choosing the Right Fractional GPU Strategy.

By default, Kubernetes exposes GPUs through a single resource name: nvidia.com/gpu. As an end user, you have no visibility into how the underlying GPU is set up and configured. For example, the GPU you are given may fall into one of the following:

Custom resource classes allow administrators to define new GPU resource names that tell users what they are actually getting. These names are configured through the GPU device plugin (typically via the NVIDIA GPU Operator) and allow you to expose multiple logical GPU types from the same physical hardware.

Some examples are shown below.

As the custom resource class name suggests, this is a time-sliced GPU.

As the custom resource class name suggests, this is a MIG GPU instance with the 1g.5gb profile (one compute slice and 5 GB of memory).

As the custom resource class name suggests, this is a fractional (0.25) GPU.
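To make the idea concrete, here is a minimal sketch of a Pod that requests a MIG-backed class. The resource name `nvidia.com/mig-1g.5gb` is the one the NVIDIA device plugin advertises under its mixed MIG strategy. The Pod name, container name, and image are hypothetical placeholders, and the class names available in your cluster depend on how the administrator configured the plugin.

```yaml
# Sketch only: pod, container, and image names are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: mig-inference-demo
spec:
  restartPolicy: Never
  containers:
    - name: inference
      image: registry.example.com/inference-app:latest  # placeholder image
      resources:
        limits:
          # One MIG instance with the 1g.5gb profile, not a full GPU.
          nvidia.com/mig-1g.5gb: 1
```

Because the class is part of the resource name, anyone reading the manifest can tell what kind of GPU slice the workload expects.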

Customers sometimes ask us why custom resource classes matter. Here are some common reasons:

Different workloads can have vastly different GPU requirements. For example,

In shared environments such as internal ML platforms, GPU clouds, or research clusters, custom classes allow administrators to achieve the following:

GPU costs add up quickly, and using full GPUs for lightweight jobs is inefficient. Custom classes enable the following:

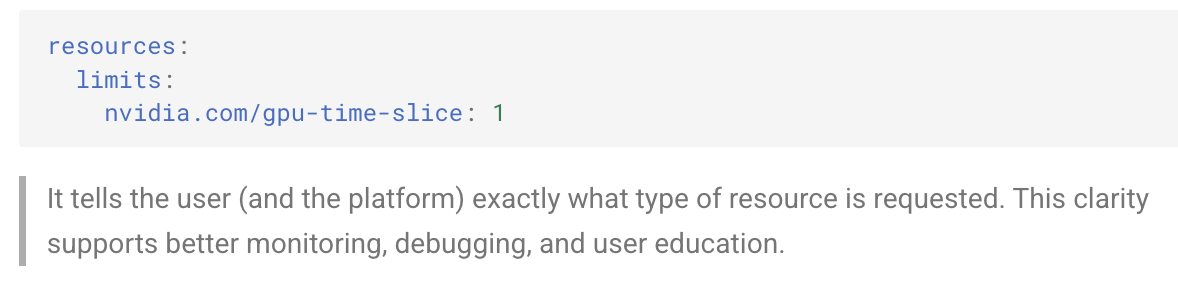

Custom resource names make GPU usage explicit in YAMLs and dashboards. For example, when you use the following YAML

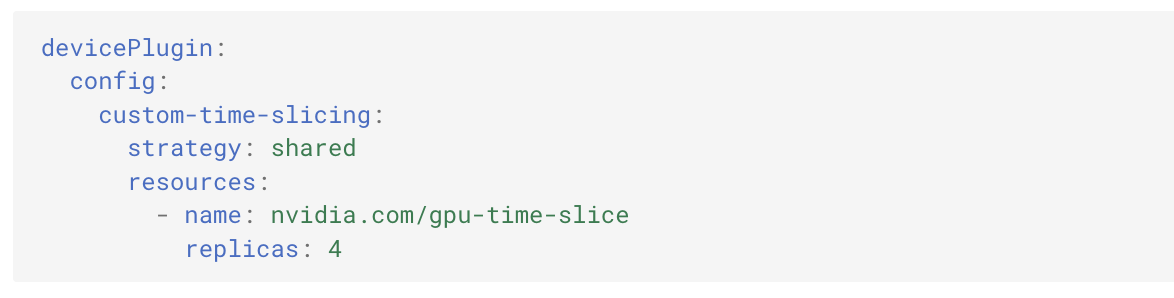

Custom resource classes need to be defined in the NVIDIA GPU Operator’s Helm values.yaml file. You can use an override such as the following:
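A minimal sketch of such an override is shown here, assuming a GPU Operator chart version that supports the `devicePlugin.config.create` and `devicePlugin.config.data` values (check the values.yaml shipped with your chart version). The ConfigMap name `time-slicing-config` and the config key `any` are illustrative choices, not required names.

```yaml
# Helm values override for the NVIDIA GPU Operator (sketch, not a drop-in file).
devicePlugin:
  config:
    create: true                 # let the chart create the ConfigMap
    name: time-slicing-config    # assumed name, pick your own
    default: any                 # config key applied to nodes by default
    data:
      any: |-
        version: v1
        sharing:
          timeSlicing:
            # Advertise replicas as nvidia.com/gpu.shared instead of nvidia.com/gpu.
            renameByDefault: true
            resources:
              - name: nvidia.com/gpu
                replicas: 4      # 4 logical units per physical GPU
```

With `renameByDefault: true`, the device plugin advertises the time-sliced units under the name `nvidia.com/gpu.shared`, so a node with 2 physical GPUs would report 8 allocatable units of that resource.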

In this example, the configuration exposes each physical GPU as 4 logical time-sliced units. Users can then request a time-sliced unit with the following YAML:
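A minimal sketch of such a request, assuming the time-sliced units are advertised as `nvidia.com/gpu.shared` (the name the NVIDIA device plugin uses when `renameByDefault` is enabled). The Pod name, container name, and image are hypothetical placeholders.

```yaml
# Sketch only: pod, container, and image names are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: timeslice-notebook-demo
spec:
  restartPolicy: Never
  containers:
    - name: notebook
      image: registry.example.com/notebook:latest  # placeholder image
      resources:
        limits:
          # One time-sliced unit, i.e. a shared slot on a physical GPU.
          nvidia.com/gpu.shared: 1
```

Keep in mind that time-slicing shares a GPU without memory or fault isolation between the workloads on it, so requesting more than one unit does not guarantee a proportionally larger share of compute.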

Custom GPU resource classes offer the flexibility, cost-efficiency, and isolation required for scalable and sustainable GPU operations in Kubernetes. Whether you’re a platform engineer, ML researcher, or infrastructure architect, adopting this pattern can dramatically improve your cluster’s GPU utilization and user experience.
