What is Multi-Tenancy? A Guide to Multi-Tenant Architecture

Multitenancy is a model where teams share infrastructure while keeping data separate. Rafay delivers secure, scalable multi-tenant Kubernetes management.


As GPU acceleration becomes central to modern AI/ML workloads, Kubernetes has emerged as the orchestration platform of choice. However, allocating a full GPU to each of many real-world workloads is overkill, resulting in underutilization and soaring costs.

Enter the need for fractional GPUs: ways to share a physical GPU among multiple containers without compromising performance or isolation.

In this post, we’ll walk through three approaches to achieve fractional GPU access in Kubernetes:

- MIG (Multi-Instance GPU)
- Time slicing
- Custom schedulers such as KAI Scheduler

For each, we’ll break down how it works, its pros and cons, and when to use it.

MIG (Multi-Instance GPU) is a hardware-level GPU partitioning feature introduced by NVIDIA with the Ampere architecture and supported on select data-center GPUs since then (e.g., A100, A30, H100). MIG allows you to divide a single GPU into up to seven isolated GPU instances, each with dedicated compute cores, memory, and cache.

We have documented how to configure and use MIG here and here.
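For illustration, here is a minimal Python sketch that builds a Pod manifest requesting a single MIG slice. It assumes the GPU Operator is configured with the `mixed` MIG strategy, under which each MIG profile is advertised as its own extended resource (for example `nvidia.com/mig-1g.5gb` on an A100 40GB); with the `single` strategy, pods request `nvidia.com/gpu` instead. The function name, pod name, and image tag are hypothetical placeholders, not part of any official tooling.

```python
import json


def mig_pod_manifest(name: str, image: str, mig_profile: str) -> dict:
    """Build a Pod manifest that requests one MIG slice.

    Assumes the "mixed" MIG strategy, where each profile is exposed as
    nvidia.com/mig-<profile> (e.g. nvidia.com/mig-1g.5gb).
    """
    resource = f"nvidia.com/mig-{mig_profile}"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "cuda",
                    "image": image,
                    # Lists the GPU/MIG device visible inside the container.
                    "command": ["nvidia-smi", "-L"],
                    # Extended resources go in limits; requests default to the same value.
                    "resources": {"limits": {resource: 1}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image tag; substitute your own.
    pod = mig_pod_manifest("mig-demo", "nvidia/cuda:12.4.1-base-ubuntu22.04", "1g.5gb")
    # kubectl accepts JSON manifests, e.g. `kubectl apply -f pod.json`.
    print(json.dumps(pod, indent=2))
```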

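Time slicing uses the GPU’s driver and runtime to context-switch between multiple containers, letting them take turns using the same physical GPU. In the NVIDIA GPU Operator, this is typically configured through the device plugin’s time-slicing (shared access) settings, which advertise each physical GPU as several schedulable replicas. Unlike MIG, time slicing provides no memory or fault isolation between the containers sharing a GPU.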

We have documented how to configure and use Time Slicing here.
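As a minimal sketch, the Python below generates a ConfigMap holding an NVIDIA device plugin time-slicing configuration that advertises each GPU as several `nvidia.com/gpu` replicas. The ConfigMap name, the `gpu-operator` namespace, the `any` data key, and the helper function itself are assumptions for illustration; per the GPU Operator documentation, the ClusterPolicy’s `devicePlugin.config.name` and `devicePlugin.config.default` fields are then pointed at this ConfigMap and key. Check the field names against the operator version you run.

```python
import json


def time_slicing_configmap(name: str, replicas: int, key: str = "any") -> dict:
    """Build a ConfigMap with a device plugin time-slicing config (hypothetical helper).

    Each physical GPU is advertised as `replicas` nvidia.com/gpu units; pods
    placed on the same GPU take turns on it and share its memory.
    """
    if replicas < 2:
        # A single replica would not share the GPU at all.
        raise ValueError("time slicing needs at least 2 replicas per GPU")
    plugin_config = {
        "version": "v1",
        "sharing": {
            "timeSlicing": {
                "resources": [{"name": "nvidia.com/gpu", "replicas": replicas}]
            }
        },
    }
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        # Namespace assumed to be where the GPU Operator is installed.
        "metadata": {"name": name, "namespace": "gpu-operator"},
        # The plugin reads this value as YAML; JSON is a subset of YAML.
        "data": {key: json.dumps(plugin_config, indent=2)},
    }


if __name__ == "__main__":
    print(json.dumps(time_slicing_configmap("time-slicing-config", 4), indent=2))
```

With `replicas: 4`, a node with one GPU would report four allocatable `nvidia.com/gpu` units, so up to four pods requesting one unit each could share that GPU.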

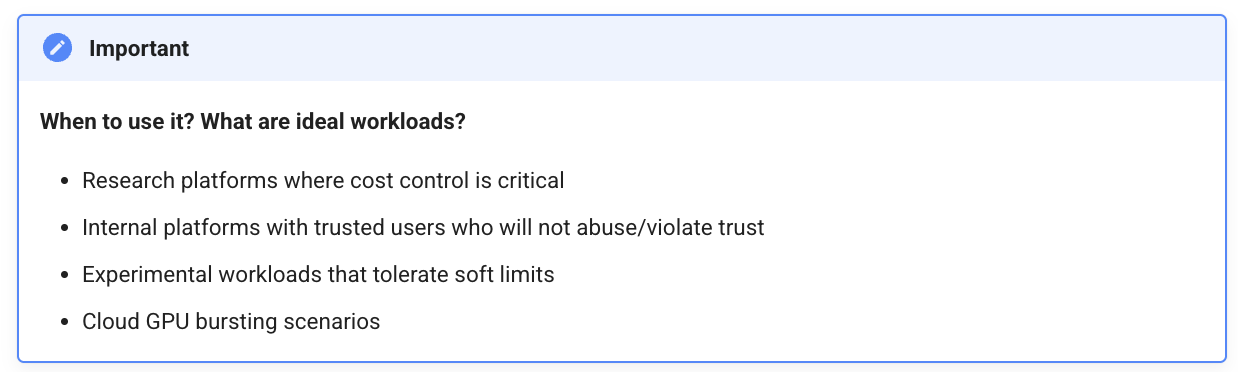

Custom Kubernetes schedulers such as KAI Scheduler introduce the concept of logical fractional GPUs by managing scheduling logic independently of the standard Kubernetes device plugin. These schedulers are made GPU-aware and use custom plugins to expose fractional resources (e.g., 0.25 GPU per pod), so several pods can be placed on the same physical GPU.

We have documented how to configure and use KAI Scheduler here.
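The Python sketch below shows two things under stated assumptions. `kai_fractional_pod` builds a Pod manifest that asks KAI Scheduler for a fraction of a GPU using the `gpu-fraction` annotation, the `kai.scheduler/queue` label, and `schedulerName: kai-scheduler`, following the project’s quickstart at the time of writing; the queue must already exist and GPU sharing must be enabled in your installation. `first_fit` is a hypothetical, deliberately simplified illustration of how a scheduler can track logical fractions per GPU; it is not KAI Scheduler’s actual placement algorithm.

```python
import json
from typing import List


def kai_fractional_pod(name: str, image: str, fraction: float, queue: str) -> dict:
    """Pod manifest asking KAI Scheduler for a fraction of one GPU.

    Annotation, label and schedulerName follow the KAI Scheduler quickstart;
    verify them against the version you run.
    """
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1 (exclusive)")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "labels": {"kai.scheduler/queue": queue},
            "annotations": {"gpu-fraction": str(fraction)},
        },
        "spec": {
            "schedulerName": "kai-scheduler",
            "containers": [
                {"name": "main", "image": image, "command": ["sleep", "infinity"]}
            ],
        },
    }


def first_fit(requests: List[float], gpus: int) -> List[int]:
    """Toy bookkeeping: put each fractional request on the first GPU with room.

    Illustrates logical fractions only; this is NOT KAI Scheduler's algorithm.
    Returns the chosen GPU index for each request, in order.
    """
    free = [1.0] * gpus
    placement = []
    for req in requests:
        for i, left in enumerate(free):
            if req <= left + 1e-9:  # small tolerance for float rounding
                free[i] = left - req
                placement.append(i)
                break
        else:
            raise RuntimeError(f"no GPU has {req} free")
    return placement


if __name__ == "__main__":
    print(json.dumps(kai_fractional_pod("frac-demo", "ubuntu:22.04", 0.25, "test"), indent=2))
    print(first_fit([0.5, 0.25, 0.5, 0.25], gpus=2))
```

With the sample requests `[0.5, 0.25, 0.5, 0.25]` on two GPUs, `first_fit` returns `[0, 0, 1, 0]`: the third request does not fit in GPU 0’s remaining 0.25, so it goes to GPU 1, and the last request fills GPU 0 exactly.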

The table below summarizes the three approaches.

To keep things simple, we recommend using the table below to quickly determine the most appropriate fractional GPU strategy.

Unfortunately, as this post shows, there is no one-size-fits-all approach to fractional GPU access in Kubernetes. The right approach depends on your hardware, workload type, and tenant isolation needs.

By thoughtfully choosing the right strategy, or combining several, you can significantly increase GPU utilization, reduce costs, and serve a wider variety of users efficiently.
