
This blog demonstrates how to use Dynamic Resource Allocation (DRA) to allocate GPUs efficiently with the Multi-Instance GPU (MIG) strategy on Rafay's Managed Kubernetes Service (MKS) running Kubernetes 1.34.

In our previous blog series, we covered various aspects of Dynamic Resource Allocation (DRA) in Kubernetes.

With Kubernetes 1.34, Dynamic Resource Allocation (DRA) is Generally Available (GA) and enabled by default on MKS clusters. This means you can immediately start using DRA features without additional configuration.

Before we begin, ensure you have:

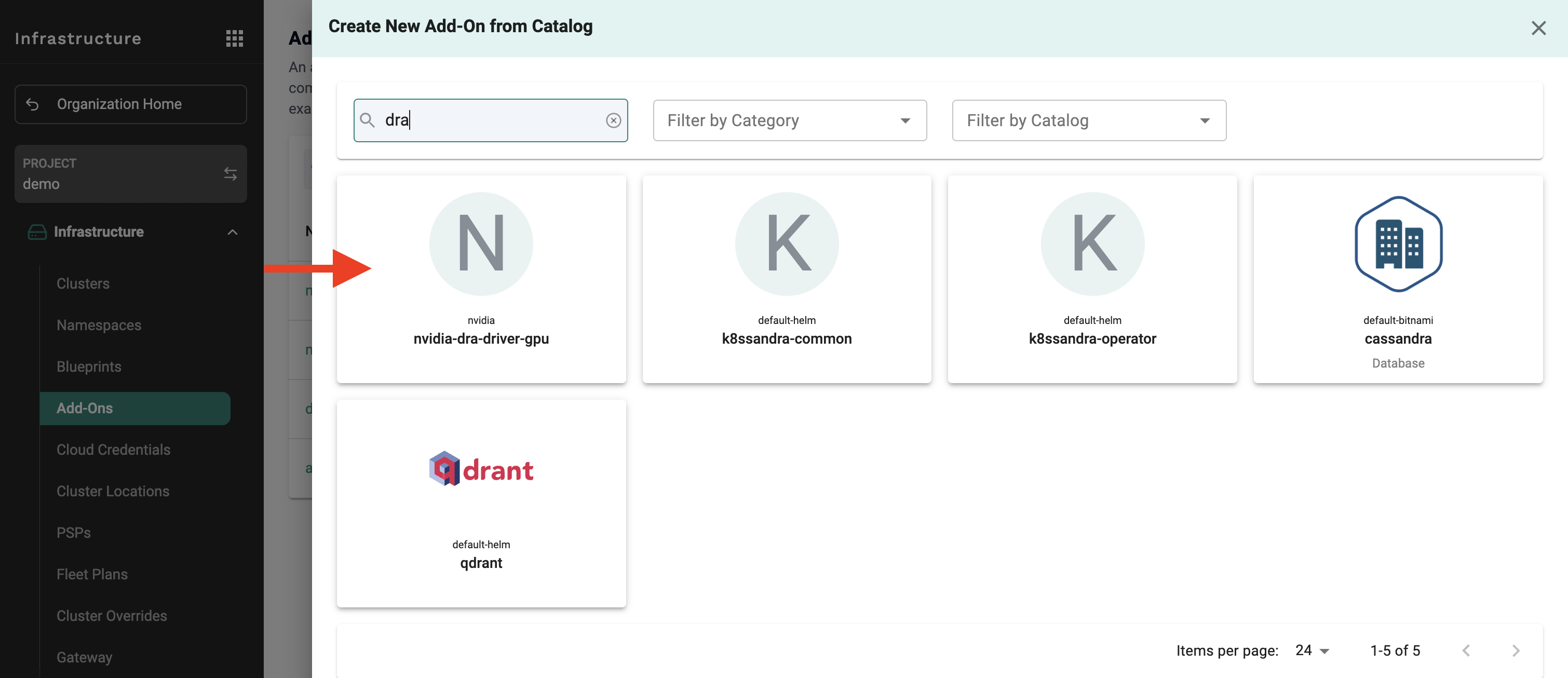

This section walks you through installing the components needed to enable DRA for GPU allocation on your MKS cluster. We'll use Rafay's blueprint workflow to deploy both the DRA driver and the GPU operator.

Before we begin, let's understand what we're installing:

The current DRA driver implementation doesn't include all GPU functionality. Advanced features such as MIG management require the GPU operator to be deployed separately. This functionality is expected to be integrated into future releases of the DRA driver.

The DRA driver has specific node affinity requirements that determine which nodes it will be deployed to. By default, the DRA kubelet plugin will only be scheduled on nodes that meet one of these criteria:

Default Node Affinity Requirements:

- feature.node.kubernetes.io/pci-10de.present=true (NVIDIA PCI vendor ID detected by Node Feature Discovery, NFD)
- feature.node.kubernetes.io/cpu-model.vendor_id=NVIDIA (Tegra-based systems)
- nvidia.com/gpu.present=true (manually labeled GPU nodes)

For this blog, we'll manually label our GPU node:

1. Identify your GPU node:

kubectl get nodes

2. Add the required label to your GPU node:

kubectl label node <gpu-node-name> nvidia.com/gpu.present=true

3. Verify the label was applied:

kubectl get nodes --show-labels | grep nvidia.com/gpu.present
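The grep above only looks for one of the three default affinity labels. As a sketch, the hypothetical Bash helper below (`dra_node_eligible` is our own name, not part of the DRA driver) checks a node's labels against all three. It assumes the labels are passed as the comma-separated `key=value` list shown in the LABELS column of `kubectl get nodes --show-labels`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the DRA driver): returns 0 if a node's labels
# satisfy at least one of the DRA kubelet plugin's default node-affinity labels.
# $1: comma-separated key=value labels, e.g. the LABELS column printed by
#     `kubectl get nodes --show-labels`.
dra_node_eligible() {
  # Wrap in commas so each label is matched as a whole ",key=value," token.
  local labels=",$1,"
  local required
  for required in \
    "feature.node.kubernetes.io/pci-10de.present=true" \
    "feature.node.kubernetes.io/cpu-model.vendor_id=NVIDIA" \
    "nvidia.com/gpu.present=true"; do
    if [[ "$labels" == *",${required},"* ]]; then
      return 0
    fi
  done
  return 1
}
```

For example, `dra_node_eligible "$(kubectl get node <gpu-node-name> --no-headers --show-labels | awk '{print $NF}')" && echo eligible` takes the last column (LABELS) of the node listing and reports whether the kubelet plugin's default affinity would match that node.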

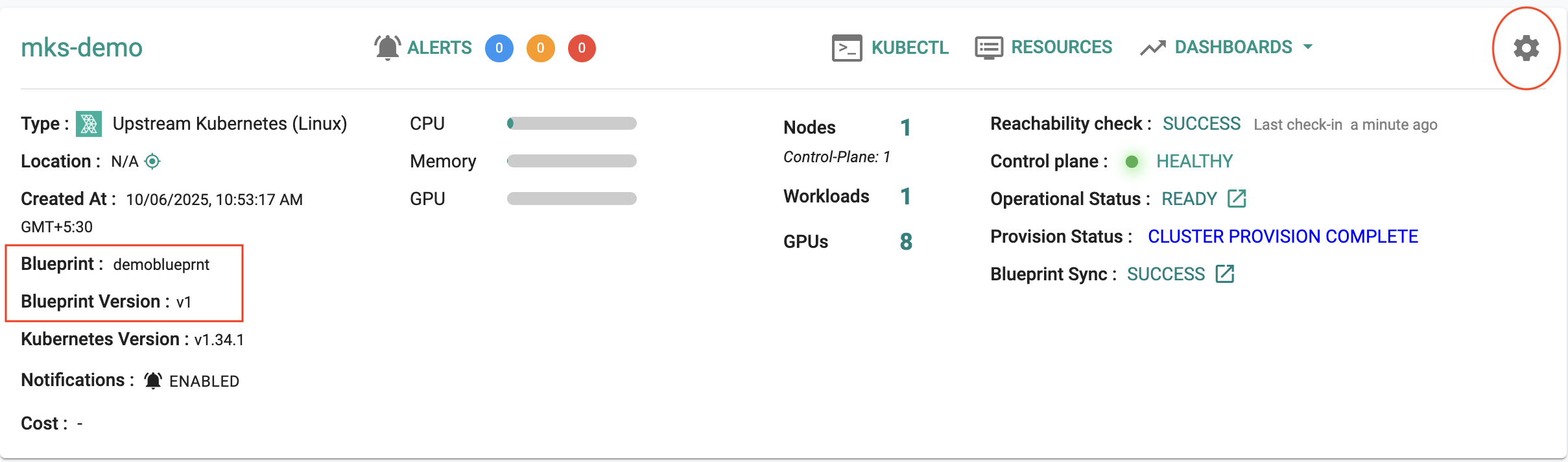

After the blueprint is applied and nodes are properly labeled, verify that the DRA components are running correctly:

Access your cluster using ZTKA (Zero Trust Kubectl Access)

Check DRA controller pods:

kubectl get pods -n kube-system | grep dra

You should see two main DRA components:

- dra-controller: manages resource allocation requests
- dra-kubelet-plugin: runs on each GPU node, advertises the node's devices, and prepares allocated devices for containers
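Seeing the pods in the list doesn't guarantee they are healthy. A minimal sketch of a stricter check follows; `dra_pods_ready` is a hypothetical helper of ours, and it assumes the default `kubectl get pods --no-headers` columns (NAME, READY, STATUS, ...). It matches pods by name fragment, so adjust the fragments to the pod names in your cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin and
# returns 0 only if, for every name fragment given as an argument, at least one
# pod whose name contains that fragment is Running with all containers ready.
dra_pods_ready() {
  local listing fragment
  listing=$(cat)
  for fragment in "$@"; do
    # Columns assumed: NAME READY STATUS ...; READY looks like "2/2".
    if ! awk -v f="$fragment" '
      index($1, f) > 0 && $3 == "Running" {
        split($2, r, "/")
        if (r[1] == r[2]) found = 1
      }
      END { exit (found ? 0 : 1) }' <<<"$listing"; then
      echo "not ready: $fragment" >&2
      return 1
    fi
  done
  return 0
}
```

Usage: `kubectl get pods -n kube-system --no-headers | dra_pods_ready dra-controller dra-kubelet-plugin`.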

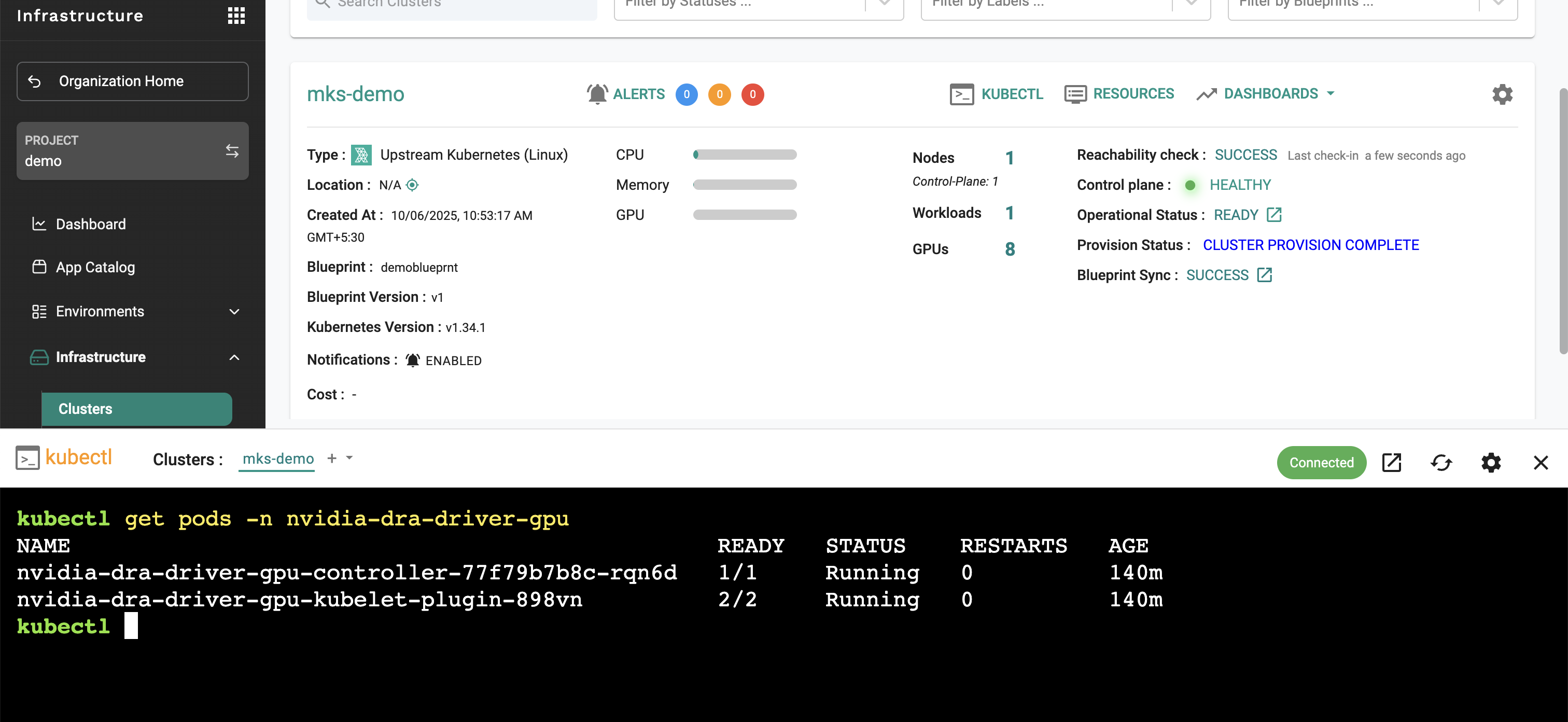

Verify DRA resources are available:

kubectl get deviceclass

kubectl get resourceslices

This confirms that DRA has created the necessary device classes and resource slices for GPU allocation.
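The workload later in this post references the `mig.nvidia.com` DeviceClass, so it's worth failing early if that class is absent. The sketch below is a hypothetical helper (`require_deviceclasses` is our name). It assumes the `kubectl get deviceclass -o name` format, where each line looks like `deviceclass.resource.k8s.io/<name>`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get deviceclass -o name` output on stdin
# (lines such as "deviceclass.resource.k8s.io/mig.nvidia.com") and reports any
# required DeviceClass names that are missing. Returns 1 if something is missing.
require_deviceclasses() {
  local -A present=()
  local line name missing=0
  while IFS= read -r line; do
    # Keep only the part after the last "/", i.e. the DeviceClass name.
    [[ -n "$line" ]] && present["${line##*/}"]=1
  done
  for name in "$@"; do
    if [[ -z "${present[$name]:-}" ]]; then
      echo "missing DeviceClass: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `kubectl get deviceclass -o name | require_deviceclasses mig.nvidia.com`.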

Before deploying workloads, we need to configure MIG on our GPU nodes. MIG allows us to split a single GPU into multiple smaller instances for better resource utilization.
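To make "splitting a GPU" concrete, here is a rough budget check for the profiles used in this post. It assumes an A100 40 GB GPU (the card whose MIG profiles are named 1g.5gb, 2g.10gb, and 3g.20gb), which exposes 7 compute slices and 8 memory slices of about 5 GB. The per-profile slice counts follow NVIDIA's MIG user guide as we understand it. The check ignores MIG placement rules, so passing it is necessary but not sufficient for a valid layout, and `mig_budget` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Rough MIG budget check, assuming an A100 40 GB: 7 compute slices and
# 8 memory slices. Ignores MIG placement rules, so passing is necessary but
# not sufficient for a layout to be valid.
declare -A COMPUTE_SLICES=([1g.5gb]=1 [2g.10gb]=2 [3g.20gb]=3 [4g.20gb]=4 [7g.40gb]=7)
declare -A MEMORY_SLICES=([1g.5gb]=1 [2g.10gb]=2 [3g.20gb]=4 [4g.20gb]=4 [7g.40gb]=8)

# Prints "compute=<used>/7 memory=<used>/8" and returns 1 if either budget
# is exceeded or a profile is unknown.
mig_budget() {
  local profile compute=0 memory=0
  for profile in "$@"; do
    if [[ -z "${COMPUTE_SLICES[$profile]:-}" ]]; then
      echo "unknown profile: $profile" >&2
      return 1
    fi
    compute=$(( compute + ${COMPUTE_SLICES[$profile]} ))
    memory=$(( memory + ${MEMORY_SLICES[$profile]} ))
  done
  echo "compute=${compute}/7 memory=${memory}/8"
  (( compute <= 7 && memory <= 8 ))
}
```

Assuming "all-balanced" on an A100 40 GB means two 1g.5gb, one 2g.10gb, and one 3g.20gb instance (our reading of the GPU operator's default MIG configuration), `mig_budget 1g.5gb 1g.5gb 2g.10gb 3g.20gb` prints `compute=7/7 memory=8/8`: the layout uses the whole GPU, and one more 1g.5gb instance would not fit.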

1. Label your GPU nodes to enable MIG with the "all-balanced" configuration:

kubectl label nodes <node-name> nvidia.com/mig.config=all-balanced

2. Verify the label was applied:

kubectl get nodes -l nvidia.com/mig.config=all-balanced

The GPU operator's MIG manager applies the layout asynchronously and reports progress in the nvidia.com/mig.config.state label; wait until it reads success before continuing.

Note that the current DRA driver doesn't support dynamic MIG configuration. You need to statically configure MIG devices using the GPU operator, similar to the traditional device plugin approach.

3. Check available MIG instances:

kubectl describe node <node-name> | grep nvidia.com/mig

Now let's deploy a workload that demonstrates how to use DRA with MIG for efficient GPU resource allocation.

This example creates a Deployment whose pods each run multiple containers, with every container requesting a different MIG instance from the same physical GPU. It shows how DRA and MIG let several workloads share one GPU, each with its own hardware-isolated slice.

What this example does:

- Creates 4 containers in each pod
- Gives each container its own MIG instance: two 1g.5gb, one 2g.10gb, and one 3g.20gb
- Keeps all of a pod's containers on the same physical GPU through a matchAttribute constraint on gpu.nvidia.com/parentUUID
- Uses a ResourceClaimTemplate for declarative resource allocation
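The matchAttribute constraint is what forces the four instances onto one GPU. The Bash sketch below only illustrates that rule; it is not how kube-scheduler allocates devices. `find_parent_gpu` and its `device profile parentUUID` input format are hypothetical, chosen for readability.

```shell
#!/usr/bin/env bash
# Illustration only (not the kube-scheduler's allocator): shows what the
# matchAttribute constraint on gpu.nvidia.com/parentUUID requires, namely that
# every request in one claim is satisfied by MIG devices on the same parent GPU.

# One entry per request in the mig-devices ResourceClaimTemplate.
REQUESTS=(1g.5gb 1g.5gb 2g.10gb 3g.20gb)

# Reads "device profile parentUUID" lines on stdin (hypothetical format) and
# prints the first parent GPU that can serve every request by itself.
# Returns 1 if no single GPU can.
find_parent_gpu() {
  local -A free=()
  local -A seen=()
  local -A need=()
  local -a order=()
  local device profile parent req ok
  # Count free devices per (parent GPU, profile); "device" is read but unused.
  while read -r device profile parent; do
    [[ -z "$parent" ]] && continue
    if [[ -z "${seen[$parent]:-}" ]]; then
      seen[$parent]=1
      order+=("$parent")
    fi
    free["$parent|$profile"]=$(( ${free["$parent|$profile"]:-0} + 1 ))
  done
  # Count how many devices of each profile the claim asks for.
  for req in "${REQUESTS[@]}"; do
    need[$req]=$(( ${need[$req]:-0} + 1 ))
  done
  # A parent GPU qualifies only if it alone covers every request.
  for parent in "${order[@]}"; do
    ok=1
    for req in "${!need[@]}"; do
      if (( ${free["$parent|$req"]:-0} < ${need[$req]} )); then
        ok=0
        break
      fi
    done
    if (( ok )); then
      echo "$parent"
      return 0
    fi
  done
  return 1
}
```

Because the template generates a separate ResourceClaim for each pod, each of the Deployment's two replicas needs its own parent GPU with a full set of free instances. On a node with only one all-balanced GPU, we would expect the second replica to stay Pending.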

```yaml
---
# ResourceClaimTemplate defines the GPU resources we want to allocate
apiVersion: resource.k8s.io/v1beta1
kind: ResourceClaimTemplate
metadata:
  namespace: gpu-test4
  name: mig-devices
spec:
  spec:
    devices:
      requests:
      - name: mig-1g-5gb-0
        deviceClassName: mig.nvidia.com
        selectors:
        - cel:
            expression: "device.attributes['gpu.nvidia.com'].profile == '1g.5gb'"
      - name: mig-1g-5gb-1
        deviceClassName: mig.nvidia.com
        selectors:
        - cel:
            expression: "device.attributes['gpu.nvidia.com'].profile == '1g.5gb'"
      - name: mig-2g-10gb
        deviceClassName: mig.nvidia.com
        selectors:
        - cel:
            expression: "device.attributes['gpu.nvidia.com'].profile == '2g.10gb'"
      - name: mig-3g-20gb
        deviceClassName: mig.nvidia.com
        selectors:
        - cel:
            expression: "device.attributes['gpu.nvidia.com'].profile == '3g.20gb'"
      constraints:
      # An empty requests list applies the constraint to every request above,
      # so all four MIG devices must share the same parent GPU.
      - requests: []
        matchAttribute: "gpu.nvidia.com/parentUUID"
---
# Deployment with multiple containers sharing GPU resources
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: gpu-test4
  name: gpu-workload
  labels:
    app: gpu-workload
spec:
  # Each replica gets its own ResourceClaim generated from the template,
  # so each replica needs a GPU with a full set of free MIG instances.
  replicas: 2
  selector:
    matchLabels:
      app: gpu-workload
  template:
    metadata:
      labels:
        app: gpu-workload
    spec:
      resourceClaims:
      - name: mig-devices
        resourceClaimTemplateName: mig-devices
      containers:
      - name: small-workload-1
        image: ubuntu:22.04
        command: ["bash", "-c"]
        args: ["nvidia-smi -L; trap 'exit 0' TERM; sleep 9999 & wait"]
        resources:
          claims:
          # "name" refers to the pod-level claim, "request" to one request inside it
          - name: mig-devices
            request: mig-1g-5gb-0
      - name: small-workload-2
        image: ubuntu:22.04
        command: ["bash", "-c"]
        args: ["nvidia-smi -L; trap 'exit 0' TERM; sleep 9999 & wait"]
        resources:
          claims:
          - name: mig-devices
            request: mig-1g-5gb-1
      - name: medium-workload
        image: ubuntu:22.04
        command: ["bash", "-c"]
        args: ["nvidia-smi -L; trap 'exit 0' TERM; sleep 9999 & wait"]
        resources:
          claims:
          - name: mig-devices
            request: mig-2g-10gb
      - name: large-workload
        image: ubuntu:22.04
        command: ["bash", "-c"]
        args: ["nvidia-smi -L; trap 'exit 0' TERM; sleep 9999 & wait"]
        resources:
          claims:
          - name: mig-devices
            request: mig-3g-20gb
      tolerations:
      - key: "nvidia.com/gpu"
        operator: "Exists"
        effect: "NoSchedule"
```

1. Create the namespace:

kubectl create namespace gpu-test4

2. Save the manifests above as gpu-workload.yaml and apply the configuration:

kubectl apply -f gpu-workload.yaml

3. Verify the deployment:

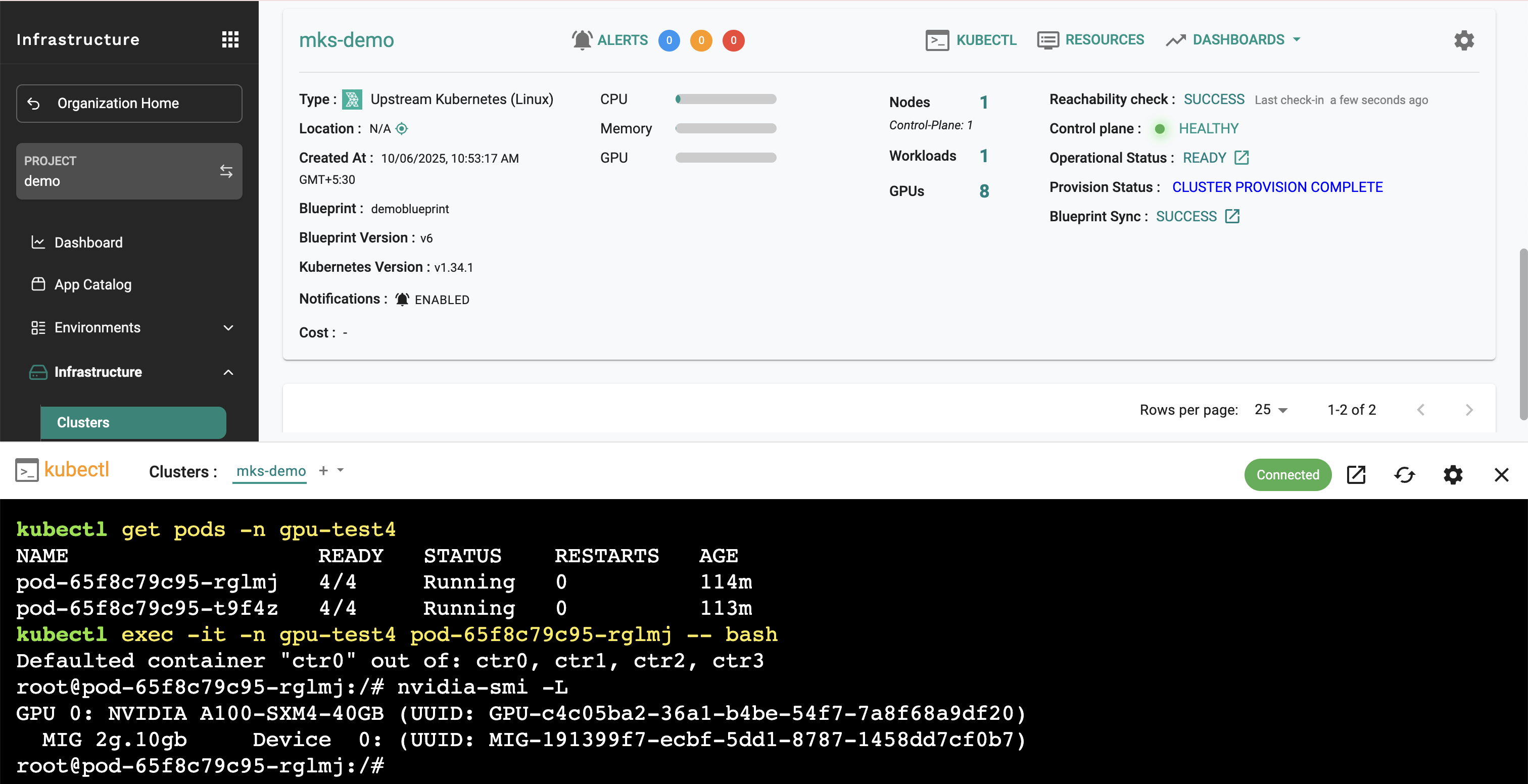

kubectl get pods -n gpu-test4

kubectl get resourceclaims -n gpu-test4
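A claim that stays unallocated usually explains a Pending pod. The sketch below flags such claims. `unallocated_claims` is a hypothetical helper, and it assumes the `--no-headers` output has NAME and STATE as the first two columns, with STATE values such as `pending` or `allocated,reserved`; check this against your own kubectl output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get resourceclaims --no-headers` output
# (assumed columns: NAME STATE AGE) on stdin and prints every claim whose
# comma-separated STATE does not include "allocated". Returns 1 if any does not.
unallocated_claims() {
  awk '
    NF >= 2 {
      n = split($2, states, ",")
      ok = 0
      for (i = 1; i <= n; i++) if (states[i] == "allocated") ok = 1
      if (!ok) { print $1; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: `kubectl get resourceclaims -n gpu-test4 --no-headers | unallocated_claims && echo "all claims allocated"`.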

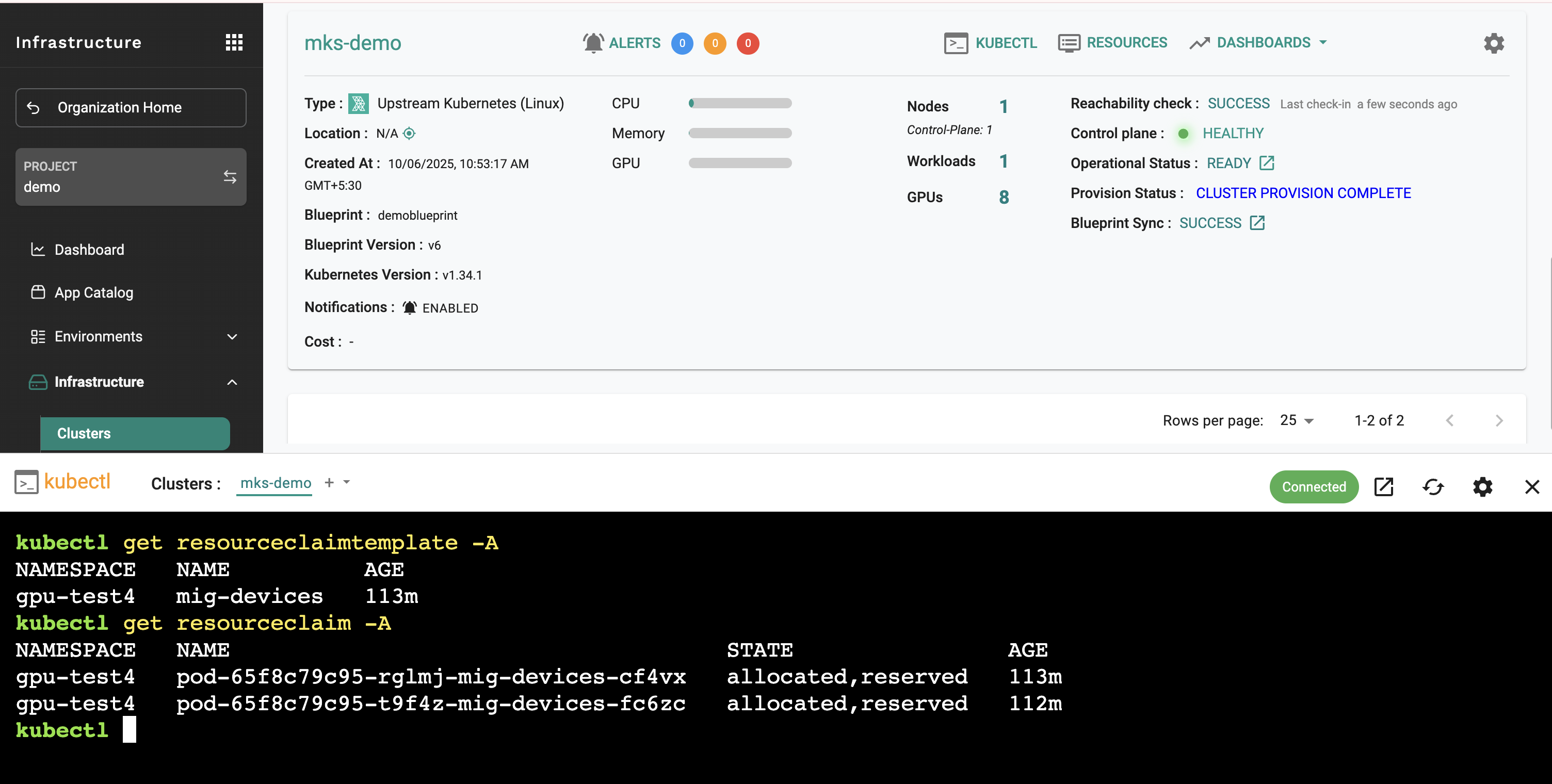

After deploying your workload, it's important to verify that GPU resources are properly allocated and accessible to your containers.
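The exec check in this section looks at one container at a time. As a sketch, the hypothetical helpers below check all four containers of a pod and expect each to see exactly one MIG device of the profile it requested. They assume `nvidia-smi -L` prints MIG devices on lines that start (after indentation) with `MIG <profile>`; the function names and that format are our assumptions.

```shell
#!/usr/bin/env bash
# Prints the profile of each MIG device line in `nvidia-smi -L` output (stdin),
# assuming lines like "  MIG 1g.5gb     Device  0: (UUID: MIG-...)".
mig_profiles() {
  awk '$1 == "MIG" { print $2 }'
}

# Succeeds only if stdin lists exactly one MIG device with profile $1.
expect_single_mig() {
  local expected=$1 seen
  seen=$(mig_profiles)
  [[ "$seen" == "$expected" ]]
}

# Runs the check in every container of one gpu-workload pod (requires kubectl).
# $1: pod name. Container/profile pairs mirror the Deployment in this post.
check_pod_mig() {
  local pod=$1 pair container profile rc=0
  for pair in small-workload-1:1g.5gb small-workload-2:1g.5gb \
              medium-workload:2g.10gb large-workload:3g.20gb; do
    container=${pair%%:*}
    profile=${pair#*:}
    if ! kubectl exec -n gpu-test4 "$pod" -c "$container" -- nvidia-smi -L \
        | expect_single_mig "$profile"; then
      echo "unexpected MIG devices in $container" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_pod_mig <pod-name>` returns 0 when every container sees only its own instance.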

Check ResourceClaims status

kubectl get resourceclaims -n gpu-test4

Execute into a container to test GPU access:

kubectl exec -it -n gpu-test4 <pod-name> -c small-workload-1 -- nvidia-smi

If you don't see the DRA kubelet plugin running on your GPU nodes, check that the node has the required label:

# Check if the label is present

kubectl get nodes --show-labels | grep nvidia.com/gpu.present

# If missing, add the label

kubectl label node <gpu-node-name> nvidia.com/gpu.present=true

The DRA kubelet plugin requires one of these node labels to be scheduled:

nvidia.com/gpu.present=true (manually added)

feature.node.kubernetes.io/pci-10de.present=true (detected by NFD)

feature.node.kubernetes.io/cpu-model.vendor_id=NVIDIA (Tegra systems)

This blog demonstrated how to leverage Dynamic Resource Allocation (DRA) with Multi-Instance GPU (MIG) on Rafay's MKS clusters running Kubernetes 1.34.

With DRA now GA in Kubernetes 1.34 and available on MKS, you can start implementing more efficient GPU resource management strategies for your AI/ML workloads today!
