

Kubernetes clusters have a lot of moving parts, and so does each application running on a cluster. With frequent application and environment updates, the state of every cluster can change rapidly. When operating at scale, with dozens of clusters and hundreds of application instances, it can be almost impossible to avoid configuration inconsistencies between clusters and configuration mistakes that result in prolonged troubleshooting, downtime, or worse.

It’s exactly these types of challenges that have led so many organizations to adopt GitOps, bringing the familiar capabilities of Git tools to infrastructure management and continuous delivery (CD). In last year’s AWS Container Security Survey, a whopping 64.5% of the respondents indicated that they were already using GitOps. That number is likely to grow when this year’s survey is released.

This blog looks at the GitOps principles and GitOps workflows that make the approach so powerful. Although GitOps methods can be applied to other infrastructure approaches, Kubernetes is the main focus.

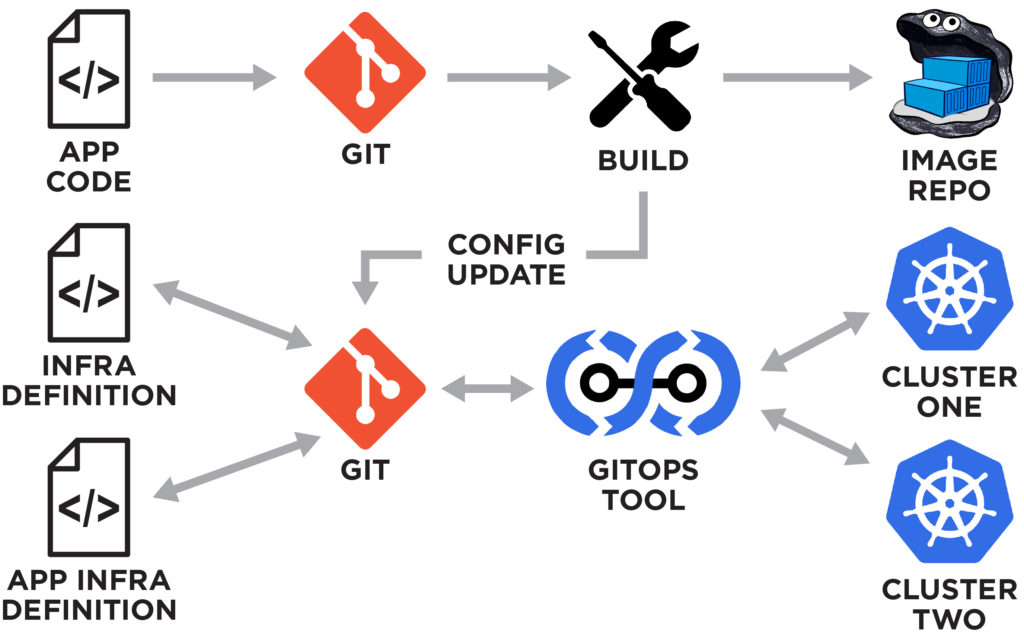

With GitOps, a Git repository stores all the information for defining, creating, and updating applications and infrastructure. By applying software development lifecycle principles such as version control, collaboration, and compliance to infrastructure, DevOps teams can manage resources more effectively. When changes are committed to the Git repository, they are pushed to (or rolled back from) the production infrastructure, automating deployments quickly and reliably. GitOps is an extension of the infrastructure-as-code (IaC) concept: managing infrastructure configuration files the same way you manage software code lets your team collaborate more effectively on infrastructure changes and vet configuration files with the same rigor you apply to code.

GitOps uses Git as a single source of truth for both infrastructure and applications. Because GitOps is declarative, it enables better standardization, enhanced security, and improved productivity. The GitOps operating model offers teams a number of advantages:

GitOps is based on a set of core principles that are easy to understand. These principles align well with the underlying design principles of Kubernetes, which is why GitOps and Kubernetes work so well together:

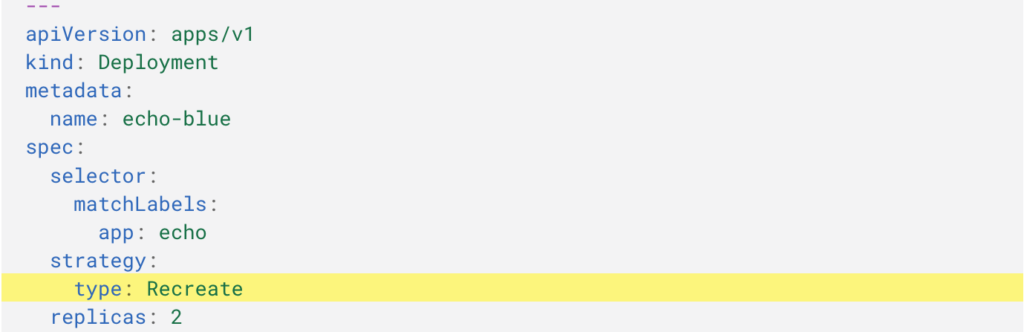

So now that you know what GitOps is and the principles behind it, how do people actually use it? One of the strengths of GitOps is that infrastructure changes are automated in the same workflow that turns application code into app binaries, which fosters better collaboration. For example, teams typically use GitOps to automate the heavy lifting required to deploy a new software feature. In addition to creating and checking in the code for the feature, you also update and check in the application manifest. Completing these check-ins can trigger a deployment of the updated code and configuration files to the specified cluster(s), or you can trigger the deployment manually. If something goes wrong with the deployment, you can roll back to a previous state just as easily.

You can control how your application is deployed on Kubernetes simply by setting the `strategy` field of its Deployment.
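A minimal sketch of a Deployment that sets its update strategy explicitly looks like this. The name `web-app`, the image reference, and the replica and surge values are illustrative assumptions, not values taken from a real deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app                  # hypothetical application name
spec:
  replicas: 4
  selector:
    matchLabels:
      app: web-app
  strategy:
    type: RollingUpdate          # the default strategy; the alternative is Recreate
    rollingUpdate:
      maxSurge: 1                # at most 1 pod above the desired replica count during the update
      maxUnavailable: 0          # never drop below the desired number of available pods
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: registry.example.com/web-app:1.2.0   # hypothetical image; changing this tag in Git triggers a rollout
          ports:
            - containerPort: 8080
```

Setting `type: Recreate` instead (and removing the `rollingUpdate` block, which is only valid for rolling updates) makes Kubernetes terminate all existing pods before it creates the new ones.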

Options for the strategy `type` include `RollingUpdate` (the default) and `Recreate`:

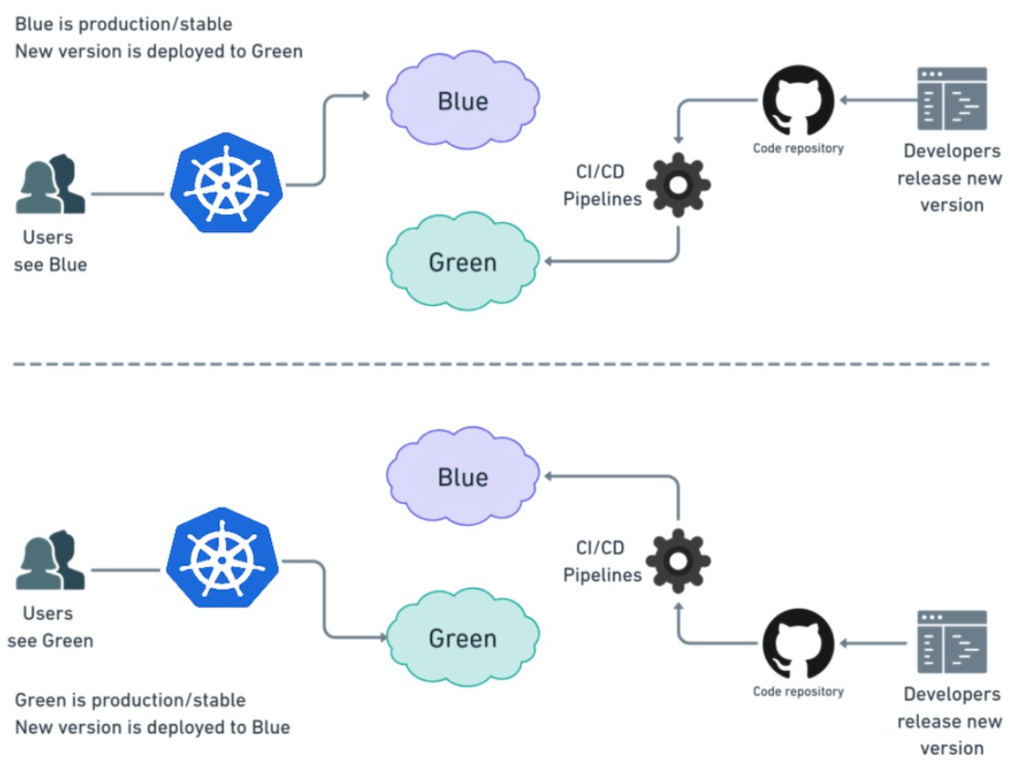

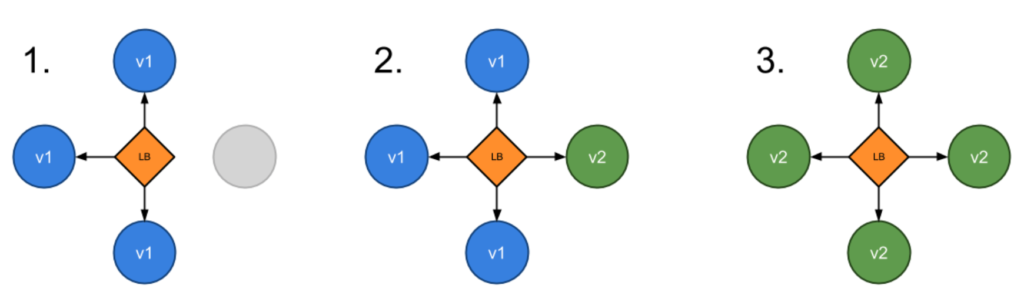

More advanced deployment patterns such as blue-green and canary deployments can be implemented with a little additional effort.

Blue-green: In a blue-green deployment, there are two versions of the application, but only one version is live and accessible to users at a time. This works well for applications where a rolling update won’t work but you don’t want any downtime. This approach also enables you to roll back to the old version immediately if something goes wrong.
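One common way to sketch a blue-green setup on plain Kubernetes is to run both versions as separate Deployments and let a Service's label selector decide which one is live. This assumes two hypothetical Deployments, `web-app-blue` and `web-app-green`, whose pod templates are labeled `app: web-app` plus `version: blue` or `version: green`; all names and ports are illustrative.

```yaml
# Hypothetical setup: Deployments "web-app-blue" and "web-app-green" run side by side.
# Their pods carry app: web-app plus version: blue or version: green respectively.
apiVersion: v1
kind: Service
metadata:
  name: web-app
spec:
  selector:
    app: web-app
    version: blue          # the live version; change to "green" and commit to cut over
  ports:
    - port: 80
      targetPort: 8080
```

Cutting over is a one-line change committed to Git. Rolling back is the reverse edit (or a revert of that commit): the Service immediately routes traffic back to the blue pods, which are still running.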

Canary: A canary deployment creates new pods alongside existing ones, as a rolling update does, but gives you more control over the update process. You implement it by periodically updating the application manifest to increase the number of new pods while decreasing the number of old pods. If something goes wrong with the new version, you can detect the problem while only a small share of users is affected.
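A minimal canary sketch uses two Deployments behind one Service. It assumes a hypothetical stable Deployment (not shown) running 9 replicas labeled `app: web-app, track: stable`; the canary below adds 1 replica of the new version. Because the Service selects only on `app: web-app`, it sends traffic to both sets of pods, so roughly one request in ten reaches the canary. Names, image tags, and replica counts are illustrative.

```yaml
# Hypothetical canary: the stable Deployment (not shown) runs 9 replicas of
# web-app:1.2.0 labeled app: web-app, track: stable.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app-canary
spec:
  replicas: 1                   # raise this (and lower the stable count) in Git to widen the rollout
  selector:
    matchLabels:
      app: web-app
      track: canary
  template:
    metadata:
      labels:
        app: web-app
        track: canary
    spec:
      containers:
        - name: web-app
          image: registry.example.com/web-app:1.3.0   # hypothetical new version
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: web-app
spec:
  selector:
    app: web-app                # no track label, so stable and canary pods both receive traffic
  ports:
    - port: 80
      targetPort: 8080
```

Each step of the rollout is then a commit that shifts replicas from the stable Deployment to the canary; once the canary holds all the replicas, the stable Deployment can be updated to the new image or removed.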

While you can implement the GitOps methodology and GitOps workflows using standard Git tools, you’ll need some additional tooling to get the full benefits, especially the ability to ensure that the desired state is maintained. Popular open-source GitOps tools that work with Kubernetes include Flux and ArgoCD. A GitOps pipeline can be pull-based or push-based. In a pull-based pipeline, a GitOps Kubernetes operator on each cluster watches the Git repository for changes and pulls them into the cluster when they occur. In a push-based pipeline, repository updates trigger the build and deploy pipeline to push updates to each target cluster.

Pull-based GitOps pipelines have a number of advantages versus push-based:

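As a rule, pull-based GitOps is more secure, since the external pipeline never needs credentials for the target clusters. The operator's active detection and remediation of configuration drift can also be highly beneficial.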
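As an illustration of a pull-based pipeline with active drift remediation, an Argo CD `Application` resource tells the Argo CD controller running inside the cluster which Git repository and path to watch. This is a sketch under assumptions: the application name, repository URL, path, and target namespace are hypothetical, and Argo CD is assumed to be installed in its usual `argocd` namespace.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: web-app                 # hypothetical application name
  namespace: argocd             # namespace where Argo CD is assumed to be installed
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/web-app-config.git   # hypothetical repository
    targetRevision: main
    path: k8s/prod                                               # hypothetical path to the manifests
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD itself runs in
    namespace: web-app
  syncPolicy:
    automated:
      prune: true               # delete cluster resources that were removed from Git
      selfHeal: true            # revert changes made directly on the cluster
```

With `selfHeal` enabled, a change applied by hand (for example with `kubectl edit`) shows up as drift from the committed state, and Argo CD syncs the cluster back to what Git declares. Flux offers a comparable pull-based model through its own custom resources.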

Rafay’s GitOps Service enables continuous delivery through automated deployment, monitoring, and management of continuous deployment pipelines, either as a service or using the tool of your choice, such as ArgoCD or Flux. The Rafay Kubernetes Operations Platform is a SaaS platform that works with any Kubernetes distribution, across public clouds and remote/edge locations.

With Rafay’s GitOps Service, you can:

Rafay recently announced that we are open-sourcing our GitOps Service as well as our Zero-Trust Access Services as part of our commitment to open source. Ready to find out why so many enterprises and platform teams have partnered with Rafay to streamline Kubernetes operations? Sign up for a free trial today and follow our quickstart guide to see what the Rafay GitOps Service can do.
