Rafay's AI and Cloud-native Blog

Explore the Latest Insights

Stay up to date with expert articles and insights on managing and orchestrating cloud-native and AI infrastructure.

Feb 17, 2026

What Is an AI Factory? A Strategic Guide for Enterprises and Cloud Providers

Feb 17, 2026

Bare Metal Isn’t a Business Model: How Cloud Providers Monetize AI Infrastructure

Feb 17, 2026

From Tickets to Self-Service: What Developers Now Expect from AI Infrastructure

Feb 13, 2026

Product

Kubernetes v1.35 for Rafay MKS

Jan 23, 2026

Product

What is Serverless Inference? A Guide to Scalable, On-Demand AI Inference

Nov 24, 2025

News

Rafay at Gartner IOCS 2025: Modern Infrastructure, Delivered as a Platform

Kubernetes

AI applications

GPU Cloud

Enterprises

Nov 10, 2025

Product

Empowering Platform Teams: Doing More with Less in the Kubernetes Era

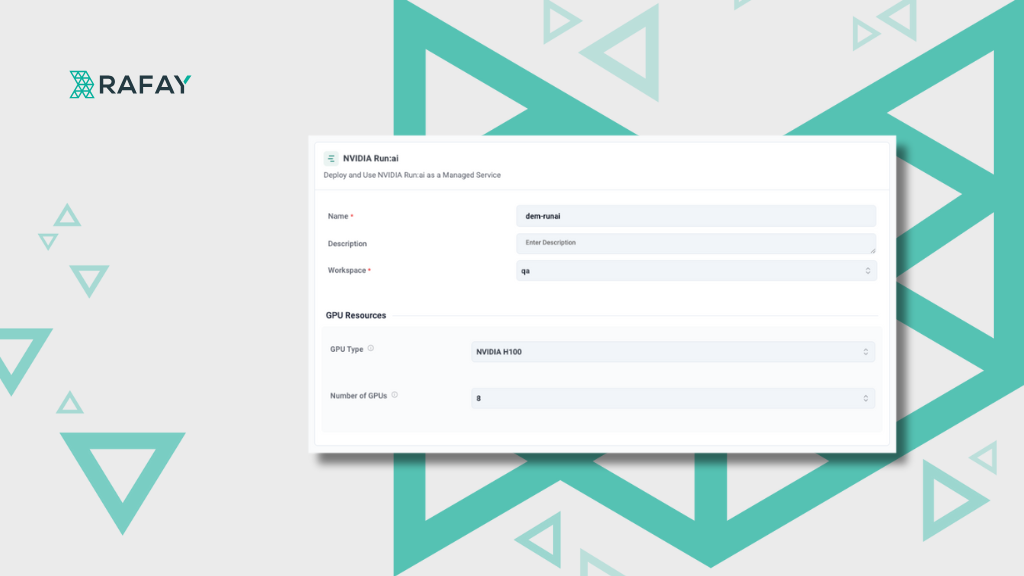

GPU PaaS

Product & integrations

GPU Cloud

Nov 2, 2025

Product

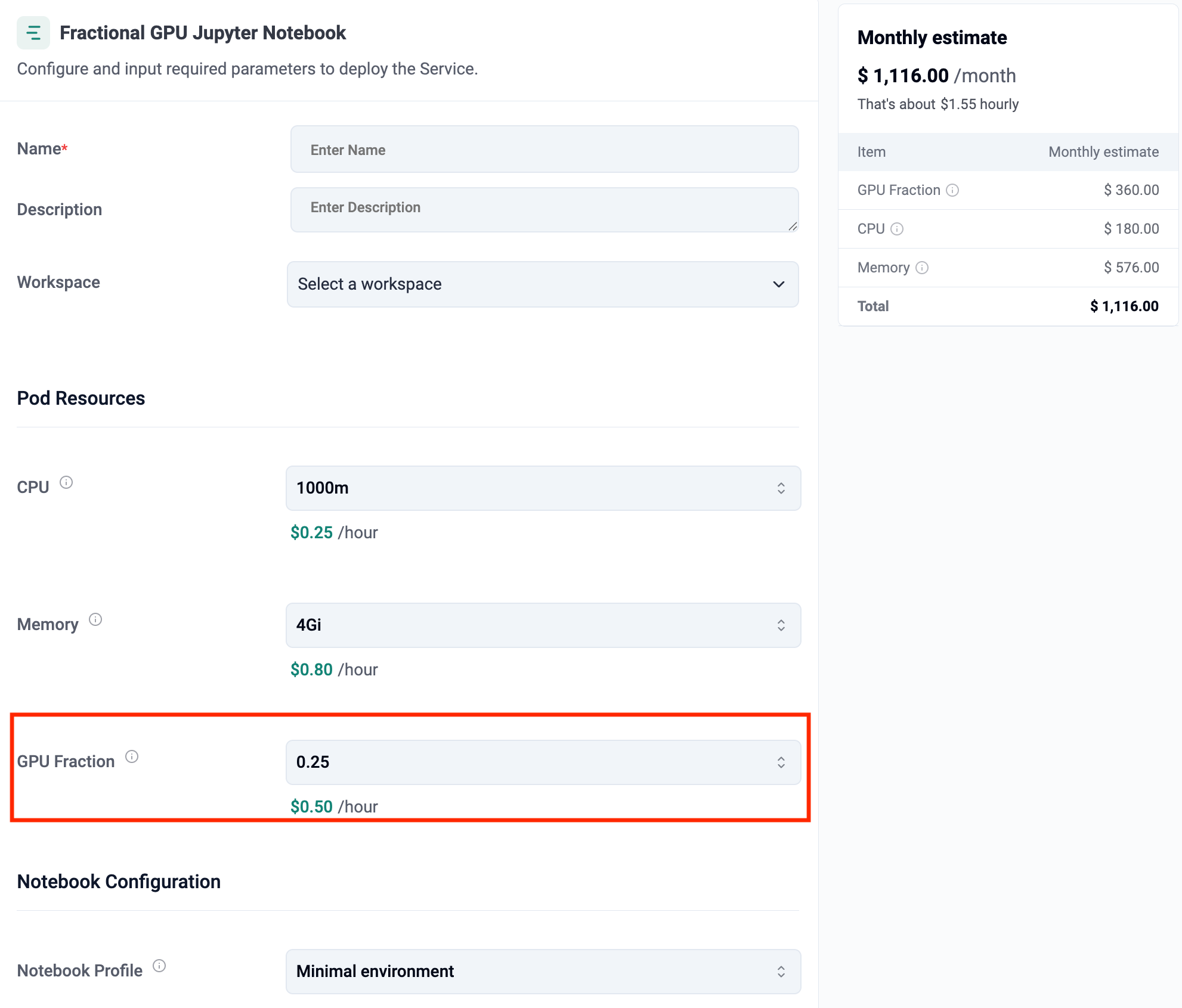

Part 2: Self-Service Fractional GPU Memory with Rafay GPU PaaS

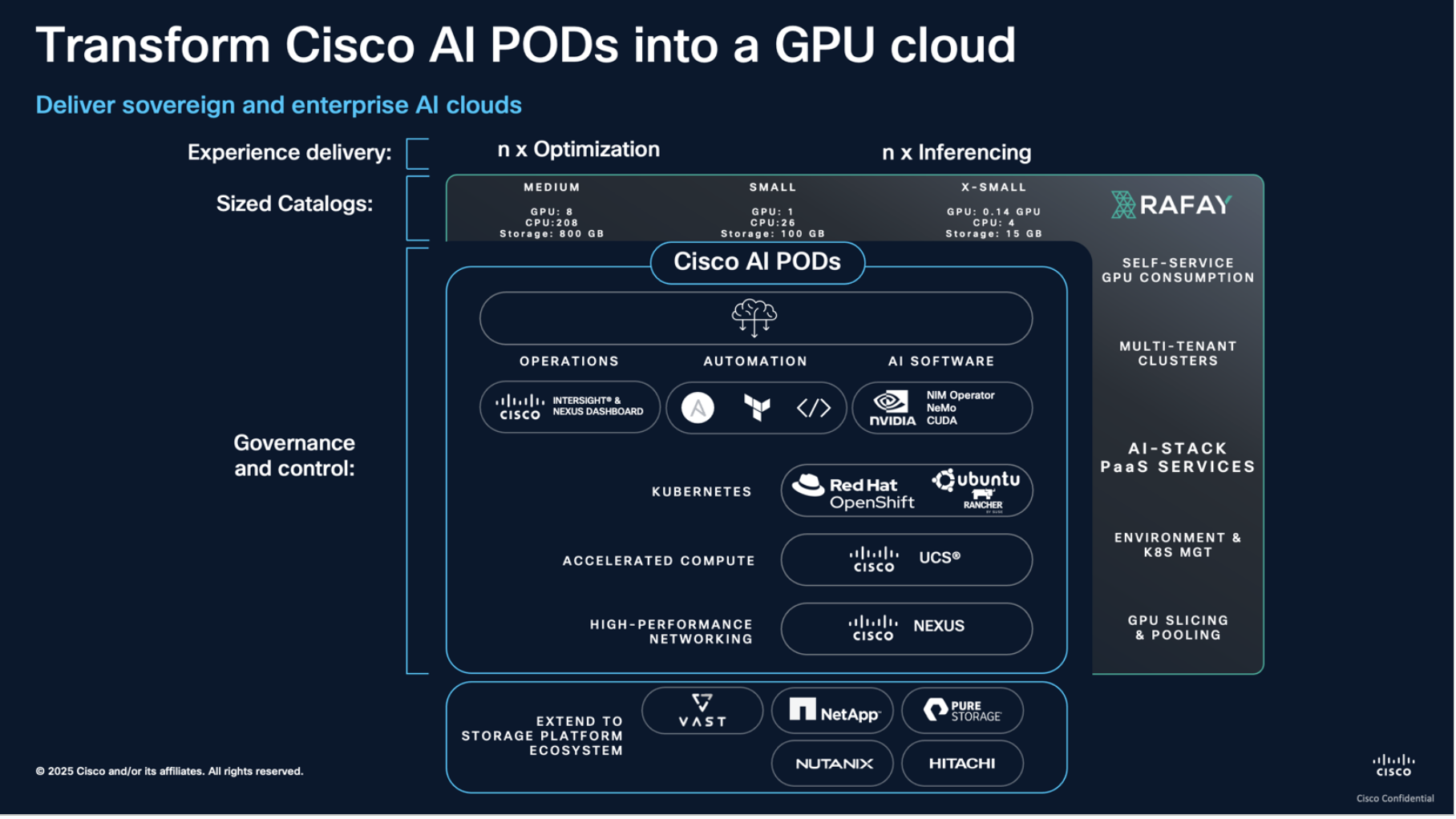

AI Infrastructure

Enterprises

GPU Cloud

GPU PaaS

Oct 20, 2025

Product

Unlock the Next Step: From Cisco AI PODs to Self-service GPU Clouds with Rafay

Oct 6, 2025

Product

Kubernetes v1.34 for Rafay MKS

Oct 6, 2025

Product

Dynamic Resource Allocation for GPU Allocation on Rafay's MKS (Kubernetes 1.34)

Sep 5, 2025

Product

Support for Parallel Execution with Rafay's Integrated GitOps Pipeline

Aug 28, 2025

What is Agentic AI?

Trusted by leading enterprises, neoclouds and service providers
